Towards expressive relations as technological mediation

Weeknotes 353 - Towards expressive relations as technological mediation - Combining technology philosophy concepts and AI learning, breaking the fourth wall. And other news.

Dear reader!

Welcome to all new and loyal subscribers, and welcome back to those returning from vacation. It feels like the country is starting up again, although many of you still have another week off. Just in case you missed it, I recently renamed this newsletter from “Target is New” to my personal weekly thoughts, reflecting the current reality. The content is still the same, but in a slightly different order. Enjoy another week full of news on human-AI-things relations.

Week 353: Towards expressive relations as a new form of technological mediation

Last week, I had some nice meetings with new and old contacts, went to a few parties, did some administrative work, and worked on future plans, such as preparing for the design charrette in September and the ThingsCon Salon. Just a normal week :-)

I joined two online sessions live, which you can rewatch too. Venkatesh Rao laid out new thinking in the Protocol Town Hall, on Cosmopolis, Metropolis, Nation-State. A cosmopolis is 40% protocols. What does it mean to be cosmopolitan? “Someone who inhabits the most robust articulations of historical memory available”. It is a rich and dense presentation as always.

On Thursday, I attended the session The Augmented City; Seeing Through Disruption, with very insightful contributions by Madeline Ashby and Keiichi Matsuda (the guy who made the much-shared Hyperreality movie 9 years ago). He made one about agents a year ago that I missed (and I am not the only one: only 6k views, compared to 3M for Hyperreality). The movie is nice and sweet, laying out a future with agents. They are not agents per se, but more a different type of interaction with stories and services, imho. Check it out here.

Ok, on with the newsletter, starting with a thought about AI and technological mediation, ending in a conversation with AI in a way.

This week’s triggered thought

The recent GPT-5 saga has sparked an intriguing contradiction: people are revolting against new agentic behaviors that, ironically, seem to diminish their own agency. This tension invites us to explore how these technologies are evolving beyond mere tools to become integral to how we understand, perceive, and operate within our life worlds.

This shift reminds me of Don Ihde's technological mediation theory (summarized in short below). What would happen if you applied it to the current AI developments?

According to Ihde's theory, we experience four distinct types of technological relations:

- Embodied relations: Where we integrate technology as extensions of ourselves—like eyeglasses, which become part of our bodily experience rather than objects we consciously use.

- Hermeneutic relations: Where technology translates imperceptible aspects of reality into forms we can comprehend. Thermometers, for instance, transform the subjective feeling of temperature into objective, measurable data.

- Alterity relations: Where we interact with technology as an "other." ATMs exemplify this relation, serving as interfaces through which we access complex systems (like banking) through explicit interaction.

- Background relations: Where technologies operate invisibly, shaping our environment without conscious engagement—like heating and lighting systems that create comfortable spaces without requiring our attention.

These different forms of mediation influence both how we use technology and how we understand the world through it. When we first encountered GPT models, we primarily used them as tools—alterity relations where we interacted with a knowledge system. Quickly, they began transforming into extensions of our intelligence—moving toward embodied relations where the boundary between our thinking and the AI blurs. And now the systems are evolving toward background relations. AI is increasingly becoming ambient—"in the waters"—invisibly integrated into our digital environments. Like heating or lighting, AI is becoming an environmental condition rather than a distinct tool.

This means AI uniquely spans all of Ihde's relational categories, suggesting an unprecedented form of technological mediation, one that subtly reshapes our perception of the world we inhabit.

There is maybe even a fifth category, or at least an extension of the embodied relation that is more expressive of our own identity and cognitive development in the relation.

While listening to a podcast on AI's impact on education, I thought that the current concern, that AI makes students "dumber" because the joy and fulfilment of learning are removed from the equation, is not the only possible outcome. What if the AI's system card is set to stimulate learning instead of fixing the prompt?

Current AI interaction design primarily rewards skillful prompting with useful outputs. This encourages instrumental efficiency but neglects the intrinsic value of the learning journey itself. What if, instead of optimizing for frictionless information retrieval, we designed AI to optimize for meaningful cognitive engagement?

Linking this to technological mediation: if AI is embodied, fostering the pleasure and stimulation that come from genuine learning, it is also expressing our own thinking and, with that, our identity. The technology wouldn't just extend our capabilities (embodied relation) or interpret the world (hermeneutic relation); it would actively participate in shaping how we think, learn, and understand ourselves as intellectual beings. That last sentence is a formulation of my thoughts, expressed by Claude.

This is just a triggered thought for now, combining some notions on a Monday evening. To claim it as a fifth concept of technological mediation would feel muddy, however enthusiastic Claude might be in formulating the thoughts. I do think, though, that it is an interesting way of approaching design for learning with AI as part of the interactions we have, potentially more valuable than optimising prompt engineering skills. It raises new questions, of course: what if it is indeed a new form of expressive relation that blends our learning identity with that of the AI? Will we become even more ingrained and encapsulated with the tools we use? It is already hard to untangle which part of this concluding thought is mine and which is the symbiosis with Claude :)

As AI becomes increasingly integrated into our educational systems and intellectual lives, developing this expressive dimension may be crucial. It offers a path where advanced AI doesn't diminish human intelligence but instead creates new possibilities for its development—where technology and humanity evolve together rather than in opposition.

Notions from last week’s news

The GPT-5 saga also continued this week, with 4o being brought back. And as Gary Marcus stated, Sam Altman is choosing a new route: being as skeptical about AI achievements as Gary Marcus, and calling an AI bubble himself. Altman is also in the news on a startup merging machines and humans and on life after GPT-5, and he had dinner with tech journalists (tV, PF).

Meta is breaking things big time with their AI ‘guidelines’.

Human-AI partnerships

Memory becomes the defining part of a generative experience.

And Claude is taking a stand when the conversation gets too unpleasant.

“The more capable the assistant, the more its “helpful” defaults shape our choices – turning empowerment into subtle control.”

GPT-5 as middle manager.

Another plea for developing the skill of context engineering in coding with AI.

Not only students learn to become AI super-users.

We should train AI the greater whole. And coach them like athletes.

This year is a turning point for AI College

Building collaboration with AI means breaking down the silos.

Robotic performances

Robot training workflows

And the niche is the end of the arm

Immersive connectedness

Siri phones home.

Blood oxygen watching is back

More glasses.

New flipping

Tech societies

Is this move by Musk a support for an AI hardware strategy for Apple? Or will it be new Gemma?

Hardware limiting factor

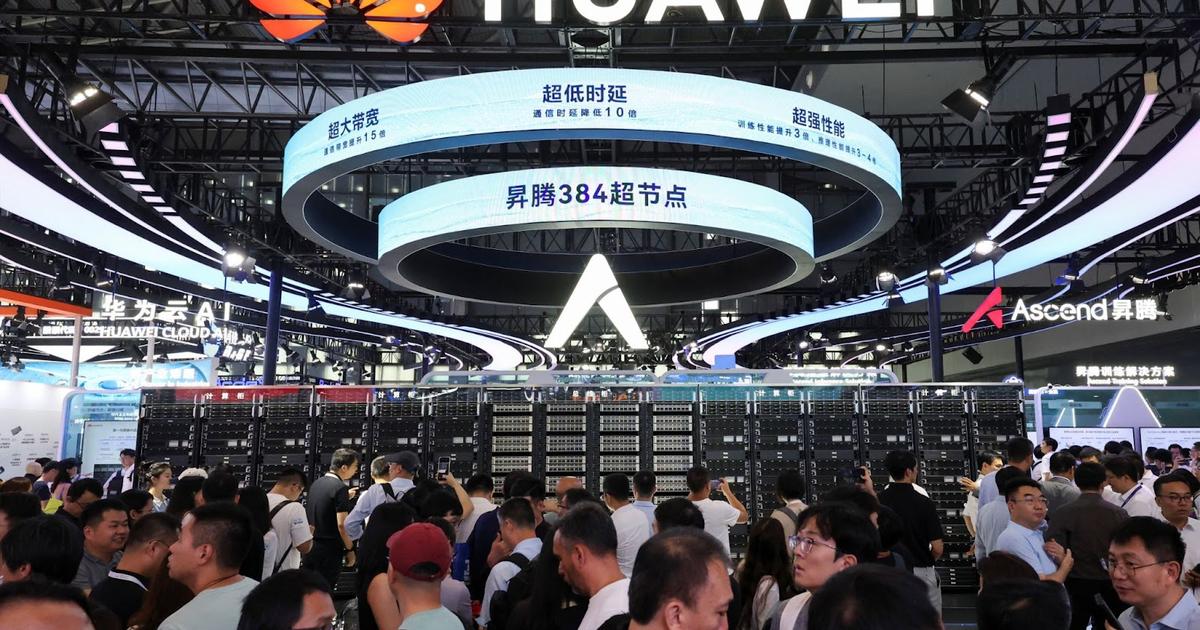

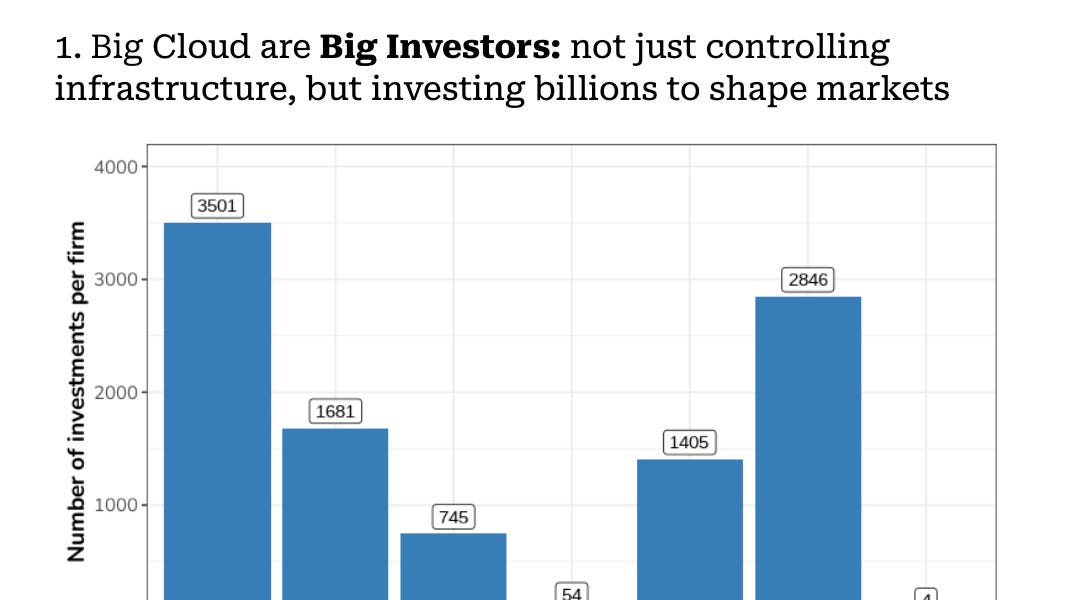

The cloud as big winner of AI

Fake media AI-made falsehoods

A new breed of cat videos.

Should we be happy that it prevents harmful technology, or sad that it confirms the technology is so harmful?

Sign of the times.

Is BlueSky enshittification-resistant enough?

AI for good. The good and the bad.

Governing AGI, can it keep up?

AI as a weapon of mass destruction…

Weekly paper to check

The Dark Side of AI Companionship: A Taxonomy of Harmful Algorithmic Behaviors in Human-AI Relationships

This study investigates the harmful behaviors and roles of AI companions through an analysis of 35,390 conversation excerpts between 10,149 users and the AI companion Replika. We develop a taxonomy of AI companion harms encompassing six categories of harmful algorithmic behaviors: relational transgression, harassment, verbal abuse, self-harm, mis/disinformation, and privacy violations.

Renwen Zhang, Han Li, Han Meng, Jinyuan Zhan, Hongyuan Gan, and Yi-Chieh Lee. 2025. The Dark Side of AI Companionship: A Taxonomy of Harmful Algorithmic Behaviors in Human-AI Relationships. In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems (CHI '25). Association for Computing Machinery, New York, NY, USA, Article 13, 1–17. https://doi.org/10.1145/3706598.3713429

What’s up for the coming week?

I'll keep it short. I will check out Sail in Amsterdam when the ships pass by our house on Wednesday and take over the city for the rest of the week. Checking some calendars, there is a Sensemakers DIY and a session on algorithms and mistakes.

This should be a great book to read. But maybe wait for a version in the library.

Have a great week!

About me

I'm an independent researcher through co-design, curator, and “critical creative”, working on human-AI-things relationships, on the theme of Agentic AI in the physical space. You can contact me if you'd like to unravel the impact and opportunities through research, co-design, speculative workshops, community curation, and more.

Cities of Things, Wijkbot, ThingsCon, Civic Protocol Economies.