Systemic co-design with agentic engineers

Weeknotes 371 - The real shift is not from human coders to AI agents; it is from coding to engineering the environment in which agents are co-designers. And other news on AI companion devices and robots at CES.

Dear reader!

With a slight delay: Happy New Year! I have some new resolutions for the newsletter. I did some thinking over the holidays, but it is not ready yet, so I will keep it to a hint: I would like to make clearer connections with Cities of Things, which is, in fact, my lens for looking at the news. I am still thinking about naming, but that is for later. I will add a direct reference to the core concepts of physical AI and human-AI collaboration. More will probably follow later.

And of course, the news in the last days was dominated by the execution (or test case) of a hinted-at 'new' US imperialism in Venezuela. The world is shifting towards new (im)balances. It was also a test case for the opinionated chatbots.

Week 371: Systemic co-design with agentic engineers

One activity to share from last week. I took part in the test run of a new immersive museum experience in the Nieuwe Kerk in Amsterdam: Botermarkt 1675, from the future Entr-museum.

I do not immerse myself in every installation and experience out there (like these in Venice), but I did the Rise of the Rainbow experience at the Stadsschouwburg during Amsterdam Pride week last summer, and it is nice to compare the two. Both did a good job of creating mixed reality experiences. In the Rainbow experience, reality was augmented with an extra layer that cleverly used a physical grid to frame the digital layer.

In the Entr version, this was inverted: the basis was full immersion in a VR world, but you walk alongside the other members of your group and see their chosen avatars as part of the experience.

I am always a bit reserved about VR, as it is often more about showing off the new technology than about designing a truly immersive experience. This one was done well, though. As a pilot, it was quite short, but long enough. Moving around really drew you in. It delivered a genuinely immersive experience, whereas Rise of the Rainbow was more of an extra layer in the presentation of information, which was also compelling.

The story itself is quite mundane, of course (compared to Rise of the Rainbow and other more artistic projects), but it succeeded at immersion. It also got my fellow participants, who do not follow these developments at all, thinking about possible use cases. We discussed how much it would add if the experience responded more to your input and became more conversational. That brings me to this week's triggered thought: how immersive will it be to work in a team of agents?

This week’s triggered thought

The shift isn't from human coders to AI agents. It's from coding to engineering.

Every, the company behind Lex (which I'm using right now), released four predictions for 2026. The one that stuck with me: the rise of the "agentic engineer." A couple of months ago, I wrote about the Austrian developer Peter Steinberger, who described this exact reality: directing a team of AI agents rather than writing code himself.

But calling this "directing agents" undersells what's happening. The agentic engineer doesn't just orchestrate; they design the environment in which agents can do their best work. They build their own tools, shape workflows, and define constraints. The craft moves upstream: from writing the code to engineering the context.

This connects to another of Every's predictions: designers building their own tools. What struck me in the Austrian developer's account was how much of his work involved creating bespoke tooling for his own process. This is what happens when the friction between idea and prototype disappears: designers no longer depend on a coder to test their thinking. They build, they learn, they iterate. Two principles are at work: understanding by doing, and eating your own dog food.

And here's where it gets interesting: if professionals are building their own tools, will end users follow? Is every product becoming less an interface to data and more a platform for making bespoke tools? Does a new layer emerge where users shape their own outcomes?

I think this points toward co-design, but a richer version than we usually mean: not just designer and user collaborating, but multiple layers of expertise woven into the design process. The economist who maps value flows in a community. The ethicist who flags downstream effects. Stakeholders who traditionally appear only in the research phase are becoming part of the building itself.

In this framing, agents don't just execute; they can represent these roles, simulate options, and play out consequences before anything hits the real world. Agents as citizens. Not tools we use, but participants in how we design.

Within Cities of Things, we have been exploring human-AI teams for a masterclass on designing these new teams, in which this kind of engineering was a key element. Building successful human-AI teams should focus on the relationships among the different human and non-human team members, not on task performance.

Notions from last week’s news

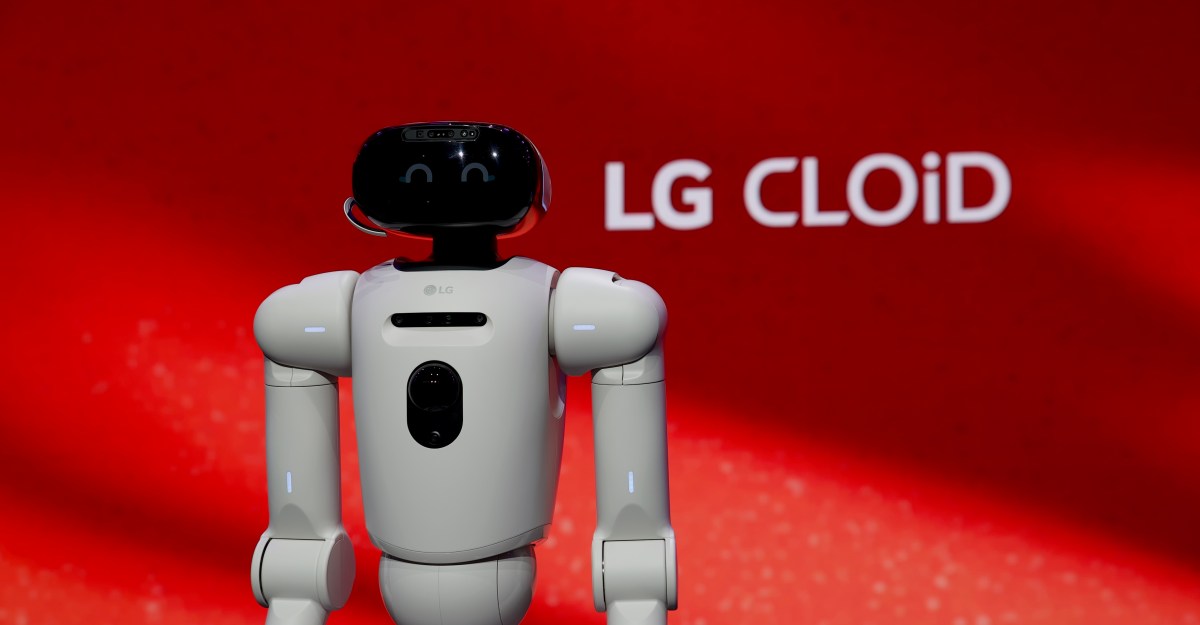

CES announcements are partly infecting the news: humanoids and connected LEGO bricks. Next week we will know more about what stuck to the wall.

Human-AI partnerships

Reading about Gemini as a TV platform with image and video generation: might it be called Slop TV instead?

Subliminal companions become physical.

OpenAI is working on a pen, people say. That is not certain, but something with embedded voice can be expected for sure.

Remarkable: Claude Code praised by Google engineer.

Robotic performances

Boston Dynamics drops a vision for Atlas. Will it be the first operational humanoid helper, or is this just a response to all the Chinese walking and waving robots?

Check their ‘trailer’ that dropped last evening.

Robot companions to watch your pets… Is that the world we aim for?

The Internet of Things had the smart fridge. The humanoid has the dishwasher operator.

Robots as consumer tech: a myriad of possibilities.

Physical AI as a new frame for feature description in robotics.

Researchers make “neuromorphic” artificial skin for robots

Immersive connectedness

It feels like a no-brainer, but it can complicate things too. Smart LEGO bricks.

It might be true that the resistance against dashboards without physical buttons is winning after all. That might lead to generic button form factors to save the cost of bespoke buttons.

Connecting new materials.

Tech societies

Is there AI doom, or will humans remain attracted to humans after all?

What are the consequences of big tech aiming for a world without people? Cory Doctorow is exploring. I also have his 39C3 talk on the playlist.

Will web rot soon overtake dead links, filling the blanks on the web?

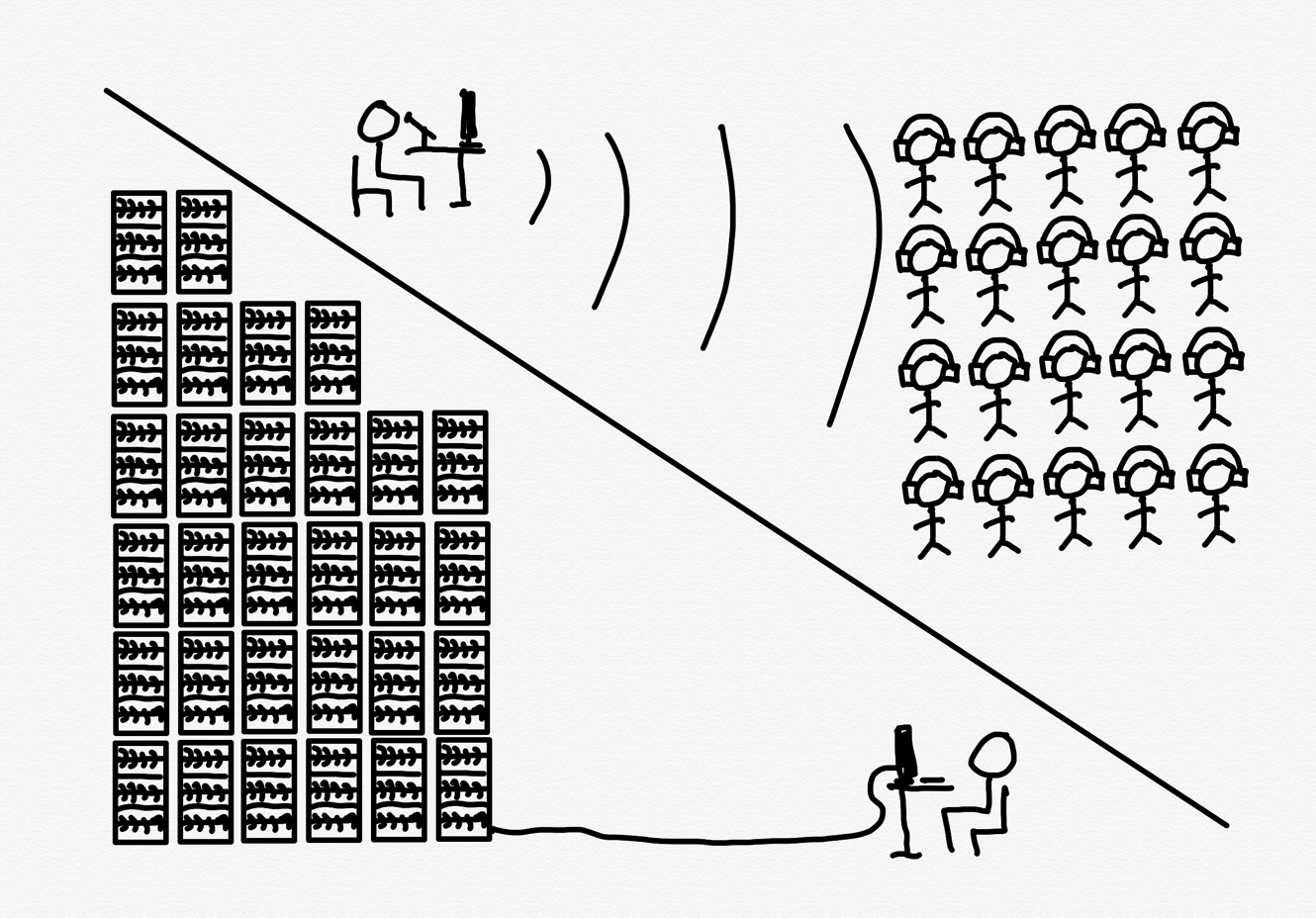

New dynamics in digital: each user comes at a cost

Fediverse futures, with or without Threads

Lean data centers.

Robot operators working from home

Paper to check

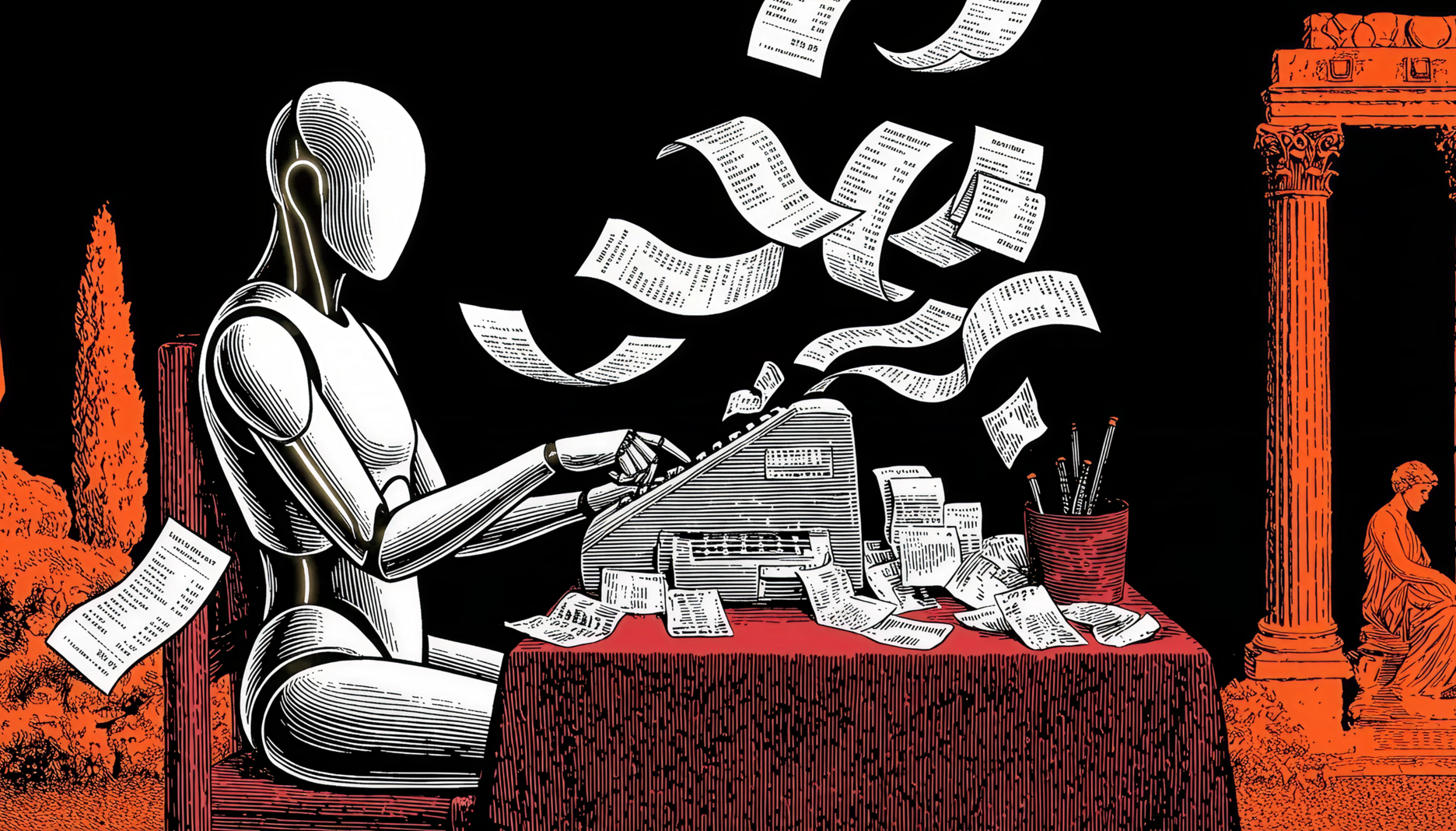

This week, a paper that examines the differences between human and artificial intelligence, supporting the idea of LLMs as stochastic parrots.

Tracing the historical shift from symbolic AI and information filtering systems to large-scale generative transformers, we argue that LLMs are not epistemic agents but stochastic pattern-completion systems, formally describable as walks on high-dimensional graphs of linguistic transitions rather than as systems that form beliefs or models of the world.

We call the resulting condition Epistemia: a structural situation in which linguistic plausibility substitutes for epistemic evaluation, producing the feeling of knowing without the labor of judgment.

Quattrociocchi, W., Capraro, V., & Perc, M. (2025, December 22). Epistemological Fault Lines Between Human and Artificial Intelligence. https://doi.org/10.31234/osf.io/c5gh8_v1
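To make the paper's phrase "walks on high-dimensional graphs of linguistic transitions" a bit more tangible, here is a toy sketch. It is not the authors' formalism, just an assumed, drastically simplified stand-in: a word-level bigram graph built from a tiny made-up corpus, walked at random. Real LLMs condition on long contexts through learned representations rather than on the previous word alone; the function names and corpus are hypothetical and only meant as an intuition pump for "plausible continuation without judgment".

```python
import random
from collections import defaultdict


def build_transition_graph(text):
    """Map each word to the list of words observed directly after it (a bigram graph).

    Repeated successors are kept, so sampling from the list is
    proportional to how often a transition occurred in the text.
    """
    words = text.split()
    graph = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        graph[current].append(nxt)
    return graph


def walk(graph, start, steps, seed=None):
    """Random walk over the graph: repeatedly sample a successor of the current word."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(steps):
        successors = graph.get(path[-1])
        if not successors:  # dead end: this word was never followed by anything
            break
        path.append(rng.choice(successors))
    return path


# Hypothetical toy corpus; any text works.
corpus = "the city senses the street and the street senses the city"
graph = build_transition_graph(corpus)
print(" ".join(walk(graph, "the", 8, seed=1)))
```

Every output is locally plausible because each step follows an observed transition, yet nothing in the walk checks whether the resulting sentence is true, which is roughly the gap the authors call Epistemia.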

What’s up for the coming week?

This time of year, there are usually some New Year's drinks around. I will visit the ones at the Creative Industries Fund and Nieuwe Instituut, as I do (almost) every year. That same evening, there is a conversation with artist Flavia Dzodan on the illusion of thinking at v2. Unlike with quantum technologies, I cannot be in Amsterdam at the same time for the Quantum Game Night at Waag Futurelab.

Some things to watch or listen to:

- KSR on real utopian futures

- Cory Doctorow at 39C3

- Fei-Fei Li - What we see and what we value: AI with a human perspective (via Alex)

Have a great week!

About me

I'm an independent researcher through co-design, curator, and “critical creative”, working on human-AI-things relationships. You can contact me if you'd like to unravel the impact and opportunities through research, co-design, speculative workshops, community curation, and more.

Currently working on: Cities of Things, ThingsCon, Civic Protocol Economies.