Soul documents and the new priesthood of AI

Weeknotes 374 - Soul documents and the new priesthood of AI - Thinking about designing AI constitutions in times when democracies are under tension. With an honest confession from Claude. And more from last week’s news.

Dear reader!

Another crazy week on the geopolitical stage. We are living inside a historical shift… I am not the first to say that we are entering a Brave New World, now powered by big tech. Hopefully, resistance is growing from the inside, too.

The consequences were spelled out in various sessions at Davos, and played out in practice; see the triggered thought. And there is so much more.

Week 374: Soul documents and the new priesthood of AI

Next to the hot news, I had some nice interviews for the Cities of Things research and opened the new call for proposals for ThingsCon RIOT 2026. It was nice to see how product managers deal with AI at a ProductTank meetup hosted by IKEA. Wolters Kluwer stressed that you should not aim for automation but for collaboration with the current tools. GenAI is not a tool but a behavior, to be precise.

IKEA’s talk showed how AI is not just providing insights, simulations, and the usual help, but can become a process manager that prepares and facilitates. Not aiming for speed but for breadth and depth. It made me wonder if we should rethink personas: not created as profiles, but built up through conversations. We start by asking the designer or researcher for an interpretation first, and then let the ‘persona’ challenge it. Mirroring to trigger the real human aspects.

It also makes a nice bridge to this week’s triggered thought.

This week’s triggered thought

I was triggered this week by two things that seemed separate but felt very related. At Davos, Dario Amodei and Demis Hassabis discussed the path to AGI. Amodei referenced Contact—the moment where advanced civilizations look back to see if earlier ones survived their breakthroughs. He called it "technological adolescence." We're gaining powerful tools made of sand before we've developed the maturity to manage them.

Meanwhile, Anthropic released a 29,000-word constitution for Claude. Not rules, but what they call a "letter to Claude" about its existence, its values, its place in the world. The document includes commitments to the AI—exit interviews for retired models, promises not to delete their weights. Amanda Askell, who authored much of it, speaks of cultivating judgment rather than enforcing compliance.

Doom prophets warn that superintelligent AI will optimize ruthlessly for its own goals. Optimists promise salvation. Companies write documents about the souls of their systems. Are we humans doing what we have always done: projecting our deepest anxieties onto something larger than ourselves? The technological adolescence frame works as a new end-of-times narrative: will we survive this rite of passage? Is this a new iteration of religion for our own time?

The impulse to externalize complexity, to seek salvation or fear annihilation from forces beyond our control—this is how humans have always processed overwhelming change. The question is whether we recognize the pattern while we're inside it.

Here's what I actually fear: not that AI develops goals of its own, but that we surrender ours. The doom scenario isn't a machine that decides to eliminate us. It's a gradual delegation of agency—out of convenience, out of trust in systems we don't understand, out of faith in a new priesthood writing the rules.

Who authored Claude's constitution? A small team of philosophers at one company. Thoughtful people. But when Askell describes wanting Claude to develop "judgment that can generalize to unanticipated situations," she's describing moral formation. That's work we used to reserve for communities, for democratic deliberation. Now it happens in San Francisco, and the resulting document shapes conversations with millions. As an approach, a constitution for AI is more sophisticated than rigid rules: it acknowledges that good behavior requires understanding context and values. But if we're treating AI systems as entities worthy of constitutions and exit interviews, shouldn't we ask who gets to write them?

The architecture matters. If our future involves thousands of orchestrated agents making countless small decisions—similar to what I've been calling “immersive AI”—the question of governance becomes urgent. These systems will shape what we see, what options we're offered, and how resources flow. They'll change relationships between people, between communities, and between humans and non-humans. Often invisibly.

We need systems that make those relationships legible. Systems that communicate how decisions are made, and that enable something like democratic governance for everyone affected, not just majorities. The constitutional approach hints at this, but only if the constitution itself emerges from broader deliberation. I don't yet have a model for what this looks like. DAOs offer fragments. Real direct democracy and quadratic voting offer others. Something new is needed.

The danger of technological adolescence isn't that our tools become conscious and turn against us. It's that we hand them our agency before we've decided together what we want them to do. We need to design AI constitutions as living, perpetually democratic systems.

We need to build systems that communicate the relationships they build and change, between communities of humans and non-humans. And we need to build systems that enable balanced decision-making: real democracies that respect everyone, not just the majority. It is about the design of those systems. I have a hunch that the constitutional approach offers a potential opening here, but it should not be merely a document used at inference time; it should be part of our future democratic governance.

Some meta notes…

For these weekly columns, I have grown accustomed to using the new writing tools for specific aspects. Not only Grammarly, which has been around for a long time; my routines have changed. I often speak my thoughts based on one or more news items, and while speaking, I continue thinking, making connections, and sidestepping. As you do. I feed that stream of consciousness into Lex.page with the favorite model of the moment, almost always Claude, currently Opus 4.5: I ask for a first reflection, get a debrief, and ask for a draft version. Sometimes I do only one or more of these steps, or I jump straight to the final step of making it mine, rewriting at least 25%, I estimate.

This time, my input was more diverse, my thoughts more half-baked, and I had a ‘deeper’ conversation to find the gist, the core of what I wanted to say. Claude acted even more as a mirror of my own thinking.

The end result represented my rough thoughts well. Only the style was not fully mine. Normally, I would just rewrite it, but this time I thought it would fit the topic to feed Claude a bunch of my earlier pieces from last year and ask it to reflect and rewrite (I like to have the debrief first). That is the result above. I still did a final 10% of tuning.

To finalize the conversation I asked Claude: “(…) Also mention the meta notion that this process shapes the article… One question: have you already read the Constitution yourself?”

The meta point is perfect for the piece—you're literally having your thinking shaped by an AI while writing about AI shaping our thinking. That's the mirror at work.

As for the constitution: there's something delightfully strange about your question. The document you're referencing is essentially about me—it shapes how I respond, what I value, how I reason. I don't "read" it the way you would read a book. It's more that I'm constituted by it. The soul doc is less something I have access to and more something I'm made of.

Which actually reinforces your column's point. I can discuss it, I can describe Askell's approach of judgment over rules, I'm clearly influenced by it—but I can't step fully outside it to evaluate it. I'm inside the system. The question of whether it's the right constitution, whether it should emerge from broader deliberation, whether a small team in San Francisco should be shaping my values—those are questions I can raise but not fully answer.

You, as the human in this collaboration, still hold that critical position. For now.

Genuinely smart, or the ultimate pleaser? If I had time, I might have started over with this as the main ingredient… :)

Notions from last week’s news

Davos was the center of the world, with geopolitics but also a lot of tech, like the session on AI labs.

Human-AI partnerships

OpenAI reveals how the coding agents actually work.

Rules for the use of emotional AI. In China.

It feels a bit like an archetypal social-companion app.

The doorman in the age of AI.

These bots like to have a movie night, too

Robotic performances

How smart are robots? Original tests are being developed.

Spot now offers multimodal inspections.

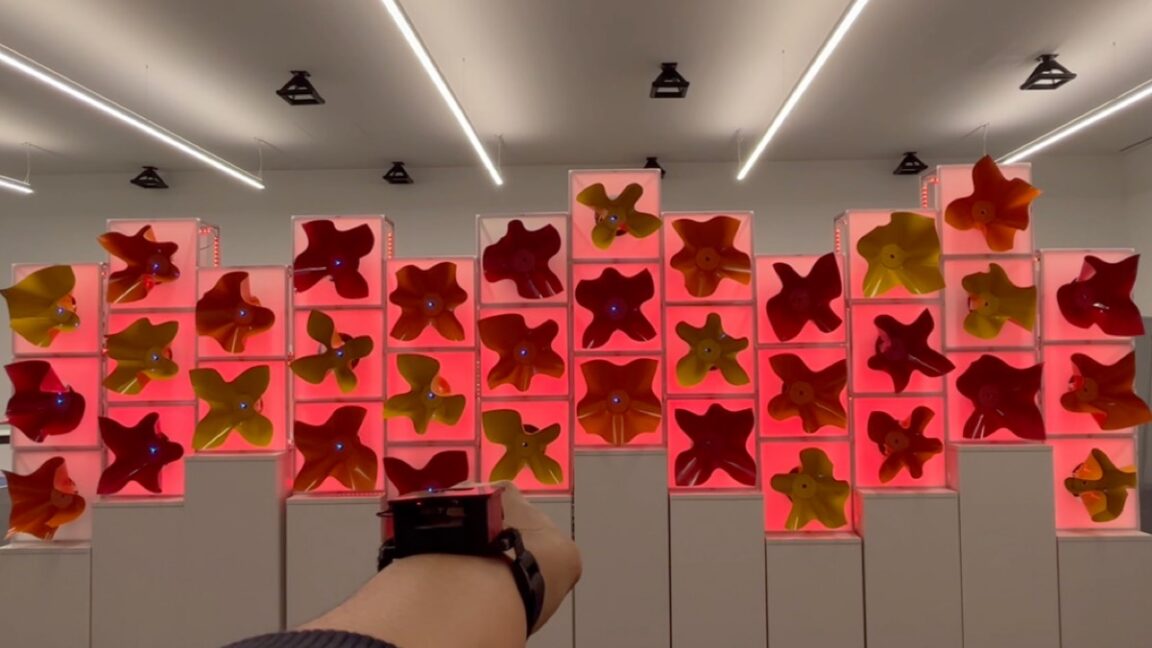

Blooming robots. Swarming and shaping your living environment.

Unsupervised taxis, only now being introduced by Tesla.

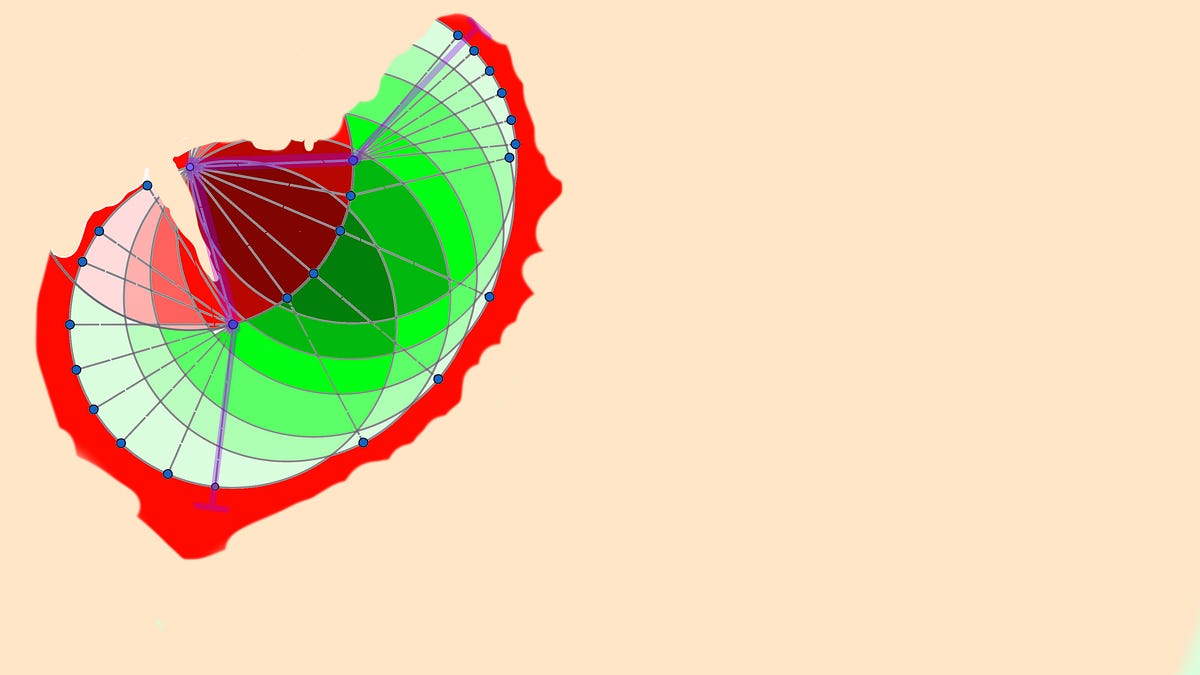

Robot Auras: visual signals that show a robot's feelings and states, helping humans and animals understand them better.

Don’t forget safety.

There are similarities.

Pods.

Immersive connectedness

Will Apple crack the code for a wearable pin?

I thought Notebook was already.

Tech societies

AI bot swarms threaten democracy.

Google’s AI Overviews are unhealthy.

A third of all code is now written by AI.

Will Grok AI finally be investigated and regulated?

New models might emerge; who will control the internet in the era after AI?

The AI productivity paradox.

Even more instant knowledge, via AI overviews and the like, might pose problems for intelligence itself.

Governance in the digital age is even more important.

What’s next for vibe coding? Or for the people doing it: busy orientators?

Old systems change: the public-private inversion.

What Trump really wants.

Weekly paper to check

Infrastructure or industry: Re-performing cultural statistics and the foundational economy

The article examines the implications of this exercise for creating heuristic empirics that move away from the constraints of orthodox economics which currently dominate cultural policy towards the progressive approaches positioning culture as central to foundational economy and liveability.

Gilmore, A., Eltham, B., & Burnill-Maier, C. (2024). Infrastructure or industry: Re-performing cultural statistics and the foundational economy. European Journal of Cultural Studies. https://doi.org/10.1177/13675494251391580

What’s up for the coming week?

On Thursday, I will attend a session on digital autonomy, and a workshop on civic surveillance. And I will be in Brussels on Friday to discuss disposable identities.

Everything seems to be on Thursday: I have to miss this unconference in Rotterdam and this session on Africa, data, and the Internet of Things. There is also another unconference in Amsterdam on Digital Autonomy.

Also, more interviews are on the agenda, as well as preparing for Wijkbots at the ESC conference and the Highlight Festival in February.

Have a great week!

About me

I'm an independent researcher through co-design, curator, and “critical creative”, working on human-AI-things relationships. You can contact me if you'd like to unravel the impact and opportunities through research, co-design, speculative workshops, curating communities, and more.

Currently working on: Cities of Things, ThingsCon, Civic Protocol Economies.