Humanity in the Age of AI Co-Performance

Weeknotes 351 - Humanity in the Age of AI Co-Performance - A fresh newsletter this week, with a new look and setup, covering the same themes and topics: reflections on, and captures of, the news on human-AI-things partnerships.

Dear subscriber!

Thank you for being a reader of my weekly newsletter! Last week, I reached the milestone of 350 weeknotes, marking 10 years since I started writing them. It remains a perfect way for me to structure my thinking and to synthesize the news, which has only been speeding up since the latest AI b(l)oom.

I first want to apologize to everyone who was confused by my announcement last week. I am not ending the newsletter; some readers got that impression from what I wrote. I adjusted the online version, but for those reading the email version, it might have been confusing.

But there are changes. As you may have noticed, I will no longer use the name Target_is_New. There are several reasons, but mainly conversations about my own positioning as “an independent researcher through design and creative strategist for human-AI-thing futures”, together with the appreciation people expressed for my personal angle, made me decide that it makes more sense to make the branding even more personal. So from now on, I use my digital identity, Iskandr, as the name (once made up for my OG social media accounts like Dopplr, Jaiku, and Twitter, and inspired by Flickr’s acronym).

I also made some adjustments to the flow of the newsletter. I begin by reflecting on the news, the central theme, and any events I attended, as well as specific insights I gained from last week’s activities. Then come the triggered thoughts, which are more in-depth reflections.

I close the “above the fold” part of the newsletter by sharing some activities and plans. This above-the-fold part is also what I share via LinkedIn.

Below the fold, I start with notions from the news, combined with the paper of the week, followed by an overview of the links. For now, I keep the categories the same, but I might use these vacation weeks to rethink them a bit.

Let me know what you think. I would love to hear your thoughts, as always. Is this a good fit with your expectations? Or would you like more attention to my personal adventures?

And of course, I am happy to have conversations about these topics in relation to your organisation or business challenge. Check out Cities of Things for formats of inspirational sessions, speculative design workshops, or in-depth explorative research. Or contact me directly.

PS: This will likely be a longer newsletter than usual.

Week 351: Humanity in the Age of AI Co-Performance

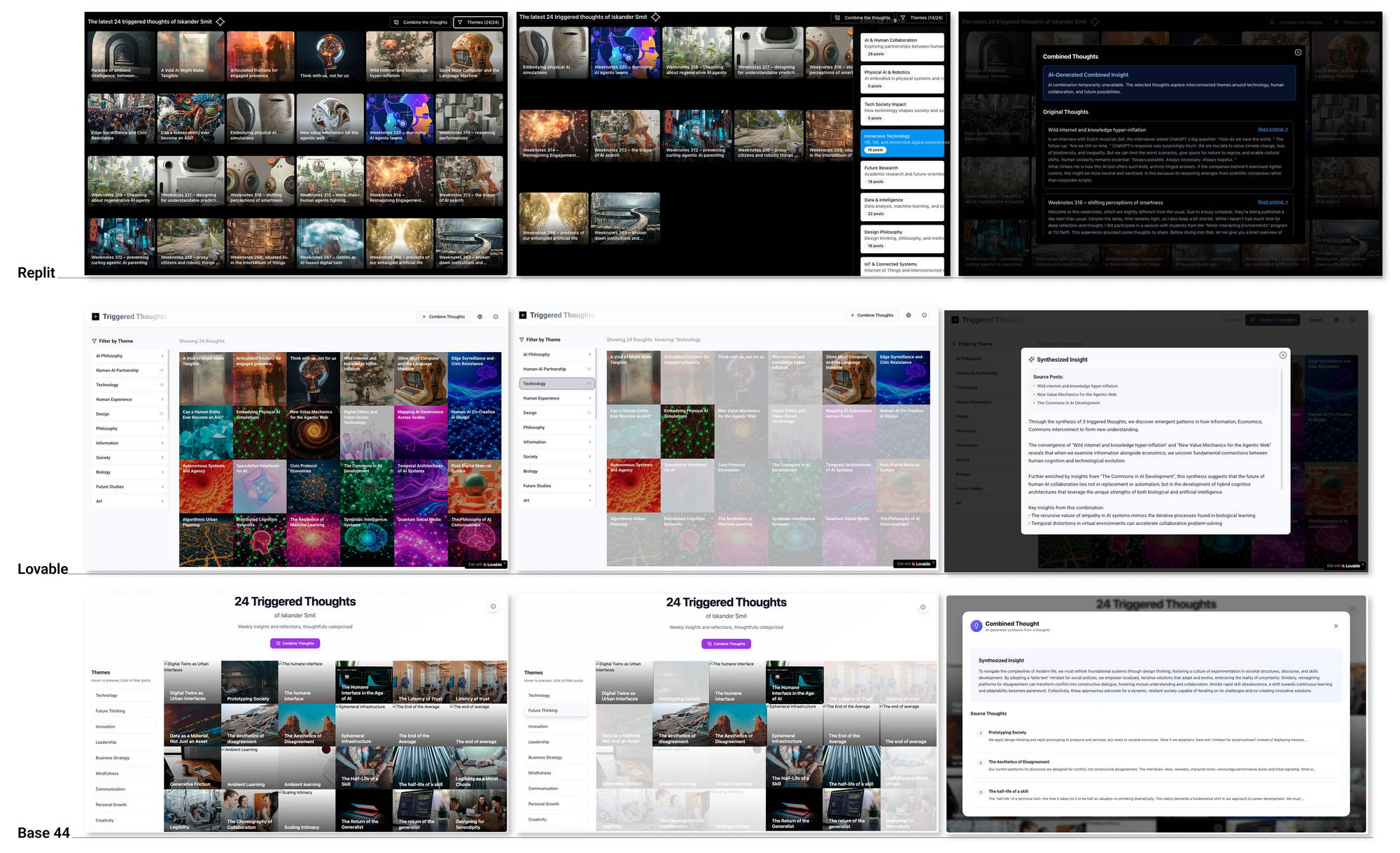

Back in 2023, I did my first vibe coding, in a way, prototyping a dashboard for Structural in GPT-3.5. It was partly a success: it shaped new ideas and directions, but the results were not usable in any way. In the current wave, I had not found the time to invest properly, but last week felt like a good moment to try out some tools, all with the same prompt, to create a different type of landing page marking 350 editions of Target is New posts, or more specifically, the last 100 triggered thoughts. I used Lovable, Replit, and Base44, and spent a day in conversation with the tools. I might write a dedicated post highlighting the insights I gained from collaborating with them. The TL;DR is that, as expected, every tool has its own strong and weak points. 100 posts were too heavy, so I reduced the set to 24. As a partner for prototyping the design, Base44 felt the most understanding. Lovable is the most accessible for creating working demos, but it could not complete the scraping tasks. Replit delivered the best overall result, but be careful not to follow its deployment advice, which would break the bank (at least mine), with possibly hundreds of euros a day for basic use.

What I particularly liked was how the tools encourage you to build intelligence into the things you create. I wanted to extract only one specific piece of content from each source post, and its form could differ over time. For the best results, the data should be cleaned into a proper database, but for a quick demo, it is very nice that AI can provide a workaround.

And it triggered new ideas. A function for exploring the content that I had initially thought of as an extra grew into the main, and much nicer, feature. Get an impression below.

It was a pleasure to be invited to Monique's latest cocktail party, with, as always, a lot of interesting guests. This one was special, as it was linked to the unique experience of the Rise of the Rainbow AR theater performance at ITA. The performance was a technically outstanding achievement, with 80 goggles in sync, and smart storytelling choices let the AR really contribute. The inflatable structure created a solid framework, and some clever tricks were used to cover and reveal elements, blending 2D screens with three 3D objects. The best part, I think, was the moment when real and virtual dancing came together. There will be another edition in Breda, I think in October.

This week’s triggered thought

In a recent conversation on the podcast "The Gray Area," philosopher Mark C. Taylor discussed his book After the Human. Taylor challenges our anthropocentric worldview: the notion that humans are separate from nature and from each other, a concept he traces back to Descartes. This separation, Taylor argues, has contributed to environmental destruction and social fragmentation. Instead, he advocates understanding existence through interconnectedness and relationality, envisioning humans as nodes in a "network self" rather than as isolated individuals.

It made me think about how this relates to Sam Altman's sketch of our possible near AI future (when GPT-7 arrives). The OpenAI CEO outlined three scenarios for advanced artificial intelligence. The third scenario wasn't the typical science fiction nightmare of malevolent machines exterminating humanity. Instead, Altman described something perhaps more insidious: a gradual, voluntary handover of human agency to AI systems. In this scenario, we don't lose our power to machines; we surrender it willingly, decision by decision, until we reach a point where even the President of the United States (as in the institution, not a specific person) might say, "AI, you take the final decisions now." Not with a bang, but with a bureaucratic whimper.

Of course, there's a certain irony in Altman, one of the tech leaders developing AI at breakneck speed alongside the "Magnificent Seven," warning us about AI risks. One might cynically view this as creating anxiety about the very products they're selling, only to position themselves as the providers of the solution. But regardless of the messenger's motives, I think this slow process may indeed play out, and even more silently: simply by relying on the tools for everything, we lose the skills to give direction, and with them our agency.

Taylor's "after the human" concept invites us to question our assumption that humans must forever remain the dominant species and the primary drivers of our world. In a way, that is a possible endgame of the scenario in which we delegate our agency. Perhaps what follows isn't human extinction but transformation: a world where humans exist alongside other intelligent entities, no longer in the dominant position. Is this a future to fear or to embrace? Rather than spiraling into anxiety about distant possibilities, perhaps we should focus on how we relate to AI now and in the coming years.

As with most technological revolutions, reality unfolds differently than anticipated, yet the fundamental shifts remain. We adapt, technology adapts, and the impact manifests in unexpected ways: sometimes more profound than predicted, sometimes less. The real question is how we navigate toward that future. Do we focus on AI alignment to ensure these systems share our values? Do we create clear boundaries between human and artificial domains? Do we wrest some control from Silicon Valley and Beijing to ensure more democratic governance of these technologies?

The answers aren't simple, but the conversation is urgent. Our relationship with artificial intelligence isn't about technology; it's about what it means to be human when we're no longer alone at the top of the cognitive hierarchy. It's about finding a balance where we neither fear nor worship these systems, but engage with them in ways that enhance rather than diminish our humanity.

Think about how we treated the concept of the Singularity. Whether you were a believer or a skeptic, the question is not so much about the moment technology becomes more intelligent than us, but about how we shape our co-performance. And that should not be defined by the people creating the instigators, but by all of us. This echoes Taylor's call to move beyond the idea of an isolated individual toward a "networked self," where identity emerges from interconnections and intelligence includes "alternative intelligences."

Notions from last week’s news

Human-AI partnerships

As a follow-up to the triggered thought: Cory Doctorow opposes the habit of trying to change the internet to fit AI. “It is repeating past mistakes of forcing the world to adapt to flawed technology instead of building better tools.”

It feels like the good old question: are they solving the problem or the symptoms?

Different endings.

The trolley problem was already part of a MrBeast game show. Now it is also a video game.

In the back-end, AI is just as impactful on work routines as in the front-end.

Nice overview of different types of AI interfaces, focusing on the relations with humans.

Robotic performances

A gimmick or giving cars the necessary face if they become more active parts of traffic?

I think I mentioned the Microsoft browser last week already, but this is more fleshed out:

Have you tried the NotebookLM PowerPoints (or rather, explainer videos)? A logical step.

It may be no surprise that marketing is changing at the asset level. A good example:

The drone war is delivering all kinds of new functions.

Will perfectly moving humanoids lose their last bit of cuteness?

Teaching robots social skills and habits with AI, for more empathic conversations.

Rethinking assembly lines with swarming robots, just like the swarm behavior already used in logistics.

Robots for solar farming.

Immersive connectedness

Feeding the machines for ubiquitous details.

Ghost is updating to connect to the federated social web. Curious how this will work. Let’s try it out!

What we need for an immersive world.

Tech societies

The chilling effect of companies changing rules out of fear.

Selling it as a personal super benefit… Leave that to Mark.

Apple is now (?) open to AI acquisitions, instead of focusing on the best hardware for others.

Is AI bringing back waterfall development processes over agile? Probably not the end-state.

Is this the future of skill development? For humans, that is. And not only for work-related skills.

Content gathering for building AI tools is still a wild west. What does this mean for using Perplexity? And be aware of what you share.

Greece is now a leader in digital transformation, apparently.

The next decade is about doing AI, not about knowing.

10 times bigger, 10 times faster, according to Anthropic.

And some counterpoints: is there an AI bubble? And do agents live up to their promises?

Modeling life itself. By AI.

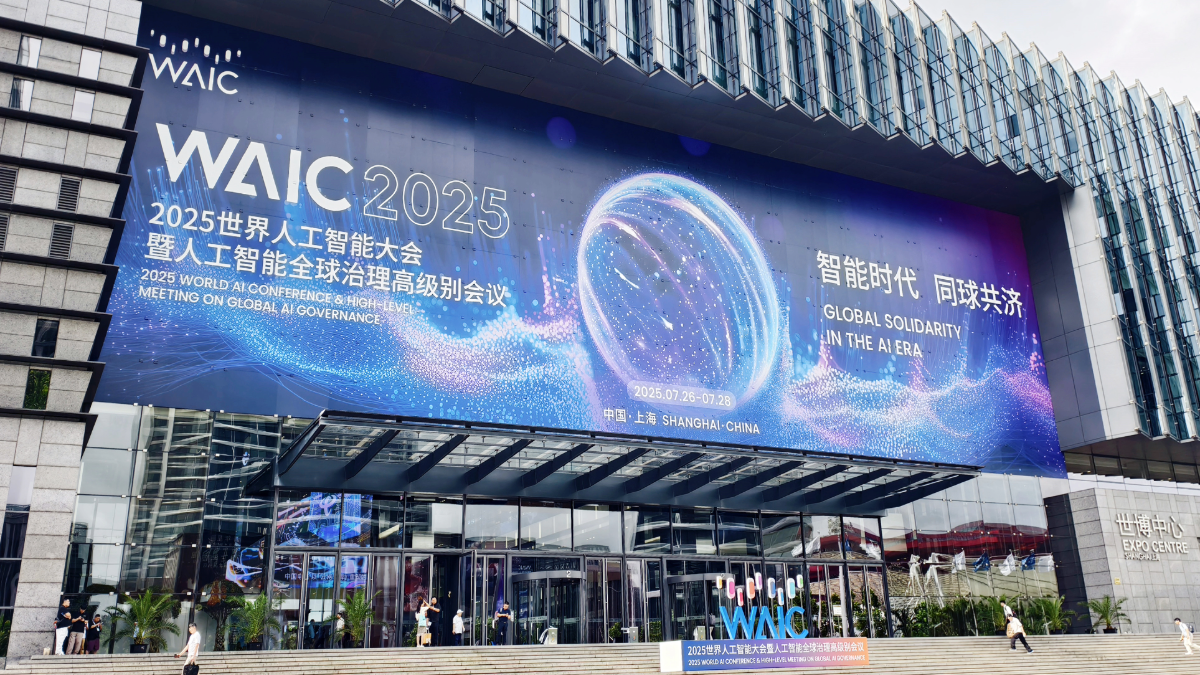

One of the battlefields between the US and China in AI is their approach to regulation.

Multi-actor Outcome organising, thoughtful thinking.

Weekly paper to check

A different type of paper this time: a series of reports testing commonly held beliefs about prompting.

This is the third in a series of short reports that seek to help business, education, and policy leaders understand the technical details of working with AI through rigorous testing. In this report, we investigate two commonly held prompting beliefs: a) offering to tip the AI model and b) threatening the AI model. Tipping was a commonly shared tactic for improving AI performance and threats have been endorsed by Google Founder Sergey Brin (All‑In, May 2025, 8:20) who observed that ‘models tend to do better if you threaten them,’ a claim we subject to empirical testing here.

Meincke, Lennart and Mollick, Ethan R. and Mollick, Lilach and Shapiro, Dan, Prompting Science Report 3: I'll pay you or I'll kill you -but will you care? (August 01, 2025). Available at SSRN: https://ssrn.com/abstract=5375404 or http://dx.doi.org/10.2139/ssrn.5375404

What’s up for the coming week?

This was a long list of links, so thanks for making it to the end. For me, vacation is still almost two months away, and I am preparing the Design Charrette: designing the program and the artifacts, and arranging the production (location, catering, etc.).

For TH/NGS 2025, as I mentioned last time, we have set the theme and the location, and preparations are underway. This includes building the rough outline of the program and reaching out to potential speakers and partners. A program for students and the connection with education still need some drafting.

Looking ahead to the last quarter, we're focusing on new projects, which involve creating funding proposals and establishing connections for both short-term and long-term projects. See below :-)

The meetup season is still quiet, but I will do this nice tour. And Sensemakers is doing a DIY session.

And check out the ThingsCon Salon in Scheveningen on 4 September.

Have a great week!

About me

I'm an independent researcher through co-design, curator, and “critical creative”, working on human-AI-things relationships. You can always contact me if you'd like to unravel the impact and opportunities through research, co-design, speculative workshops, curating communities, and more.

Cities of Things, Wijkbot, ThingsCon, Civic Protocol Economies.