Emerging from the in-between space of human and machine understanding

Weeknotes 368 - Building an AI Chair, following the recipe by James Bridle, triggered thoughts and links. This and more on ThingsCon, and the captured news of the last week.

Dear readers, dear subscribers,

This is the 16th December edition of my newsletter, which means I am indeed a day later than usual. I normally post on Tuesday mornings, but there was some final cleanup work for the conference (TH/NGS 2025, that is), including literally moving stuff. That's all part of the deal. Let's quickly dive in.

Week 368: The Chair That Grew on Me

I wrote a bit about ThingsCon on LinkedIn. It was a great edition imho. What I always like so much about ThingsCon is the mix of people: different backgrounds, academics and practitioners, students with their energy, and seasoned members of this community alongside new ones.

Matt Jones has a write-up of his talk online, and Haque Tan shared a few slides. You can find more reports on LinkedIn by Deborah Carter, Daniel Klein, Tom van Wijland, Sen Lin, Yagmur Erkisi, and Klaas Hernamdt (I hope I did not miss any).

I think ThingsCon has really taken off nicely. We had more workshops about making stuff (thinking by making) than in the last two years.

We felt it should be more about the impact of things, things that become part of our personal life—things we make for ourselves, things that have a smaller footprint. What does the concept of regenerative design mean?

We started this year thinking about what would come next after 2023's Unintended Consequences and 2024's Generative Things. It led to the theme resize<remix<regen.

Personally, I had a special experience making an art piece following the recipe of James Bridle, someone I've been following for years. James has a remarkably sharp way of observing the world, and this time it comes with a deceptively simple project: have an AI design the plan for a chair and see how that works out. I write more about it in the Triggered Thought below.

It was nice to be interviewed about TH/NGS and beyond, in Dutch, under the title (translated): "Will we be the ones deciding how we deal with our AI-driven living environment, or will we leave that to American tech companies?"

I decided to enter the SXSW London panel picker with, in a way, the same topic, though I'm not sure it fits that stage :-) My proposal: Reclaim collectivity in the age of human-AI collaborations.

The open letter by a group of scholars, initiated by Cristina Zaga, that I mentioned last week has now been published: Thoughtfully Shaping Our Digital Future. Important.

This week’s triggered thought

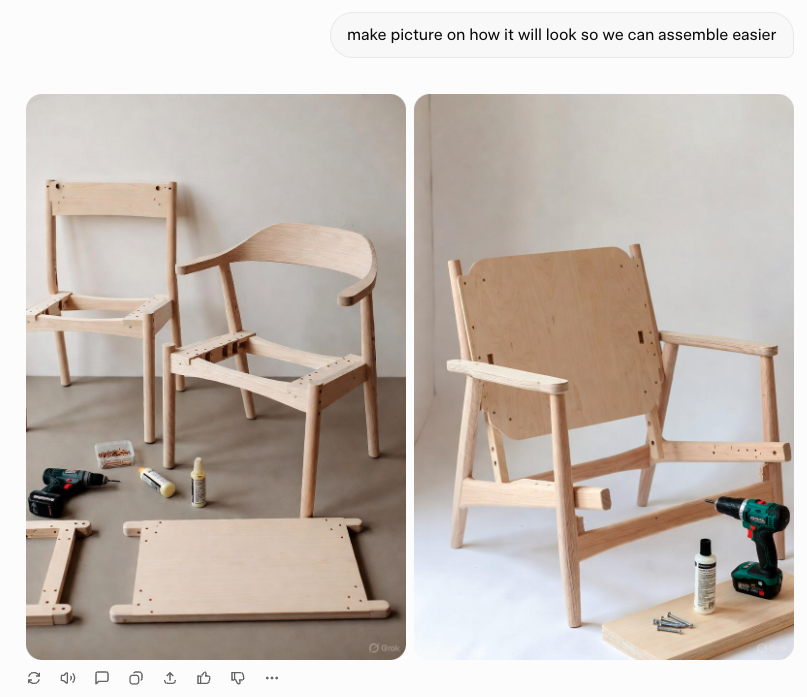

Last week at ThingsCon, we built a chair designed by AI. Or rather: we built a chair from instructions generated by an AI that had no idea what a chair is. The workshop was based on James Bridle's "AI Chair" project—a simple recipe. You feed an LLM a list of scrap materials, ask it for a plan to build a chair, then follow the instructions literally. We used Grok, as suggested by James. It proposed a lounge chair.

The sawing instructions were precise. The assembly logic was plausible. But halfway through, staring at a growing pile of cut wood that didn't seem to be converging toward anything sittable, we asked Grok to generate an image of what it had described. The image looked nothing like the instructions. It wasn't even a lounge chair.

This is the gap that matters. The system could produce—confidently, fluently, at length. But it couldn't understand what it had made. It had no concept of "chair" as something to sit on, no sense of load-bearing, comfort, or human proportion. It had patterns of chair-related language, assembled into something grammatically coherent and practically useless. We finished the chair anyway. It exists. You can sort of sit on it. It's a strange object—part furniture, part evidence.

In a way, it relates to the open letter's call for a different approach to AI development. Not "what can we build?" but "what do we need, and for whom?" They use two Dutch words that don't translate cleanly: zorgvuldig (careful, precise, conscientious) and zorgzaam (caring, care-full). They argue for a digital future grounded in people, nature, and democracy, not capability for its own sake.

The chair makes this concrete in a way. Here's a system that can generate, but not understand. That can propose, but not evaluate. That can scale, but not care. Every step of the build required human judgment: interpreting ambiguous instructions, correcting for impossible dimensions, deciding when to trust and when to override. The AI contributed material. We contributed meaning.

This is why the "be as smart as a puppy" principle, coined years ago by Berg London, still resonates; Matt Jones spoke about it at TH/NGS. A puppy is limited but legible. It can't do everything, but you understand what it can do. That's a better design target than an oracle that speaks with confidence about things it doesn't understand.

The conference theme this year was "Resize, Remix, Regen." The thinking behind "resize" is not only about efficiency: less material, a smaller footprint. Maybe it also means scaling to a point where you can still care. Size the system so there's room for you in it.

The chair doesn't work very well as furniture. But it works beautifully as a relationship. I built it. I argued with an AI about it. I'm keeping it.

That might be the point, and it makes the chair a perfect artifact of our current AI moment.

An AI Chair, the Grok edition, following the instructions of James Bridle's AI Chair project. Made by Marco van Heerde and me.

About me

I'm an independent researcher through co-design, curator, and "critical creative", working on human-AI-things relationships. You can contact me if you'd like to unravel the impact and opportunities through research, co-design, speculative workshops, community curation, and more.

Currently working on: Cities of Things, ThingsCon, Civic Protocol Economies.

Notions from last week’s news

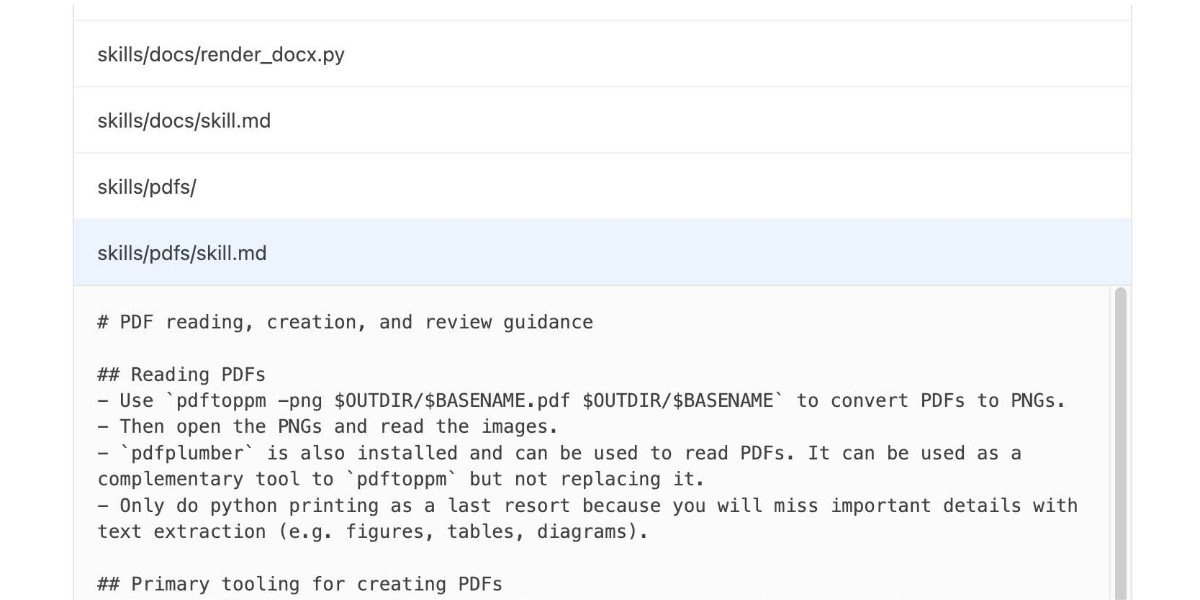

The Code Red within OpenAI seems to have ended with the introduction of GPT-5.2, and of new image capabilities. OpenAI is also adopting skills, like Anthropic, making it more ready for agentic use. Good for fact-checking; is it the end of hallucination?

Google has an experimental AI browser out now, with a waiting list.

And Cursor, kind of the OG of vibe coding, now has a visual editor: Cursor Browser.

Human-AI partnerships

Consent in the era of agentic AI…

This might be an insightful book on AI agents; I already read an interview with the author.

Will the importance of mood increase as devices become more intelligent?

Will these chatbot-powered toys be allowed in Australia?

Robotic performances

City Logistics 4.0 reflects the latest thinking on the future of logistics. Apparently. As is often the case with these scenarios, the future is bright…

Oh dear, an icon of robotics in the home is going out of business. It might be bought by others.

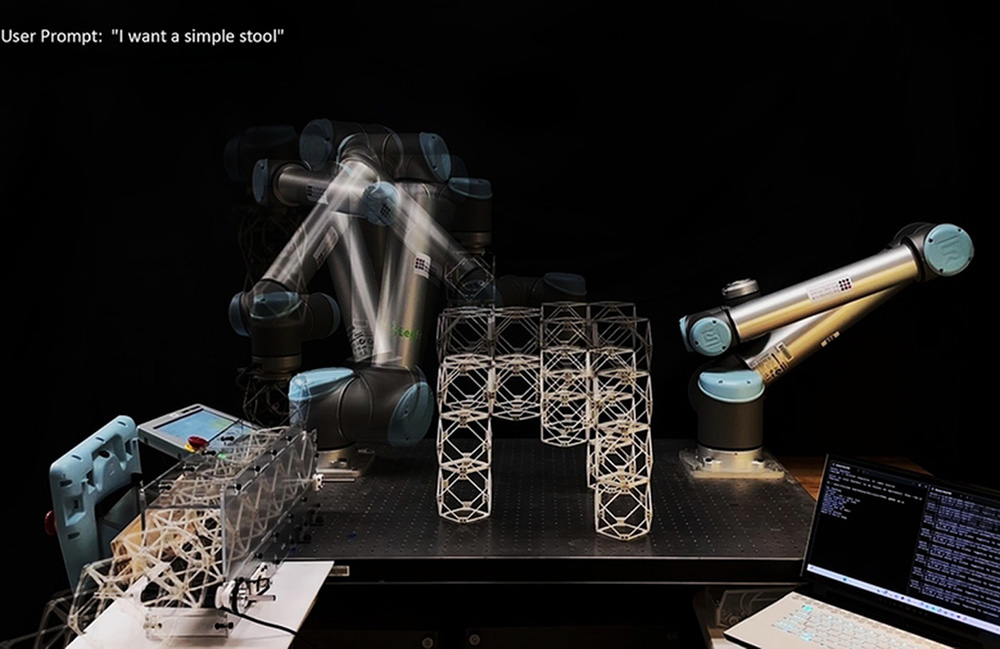

A bit different from the AI Chair, still.

Humanoids need gun control too.

Immersive connectedness

A new update for the AI glasses.

Makes sense.

Tech societies

People are surprised that Trump is getting interested in AI. It is not so strange, though, considering the possibilities it offers for manipulating power and its impact on the US economy. Grok is also making a case.

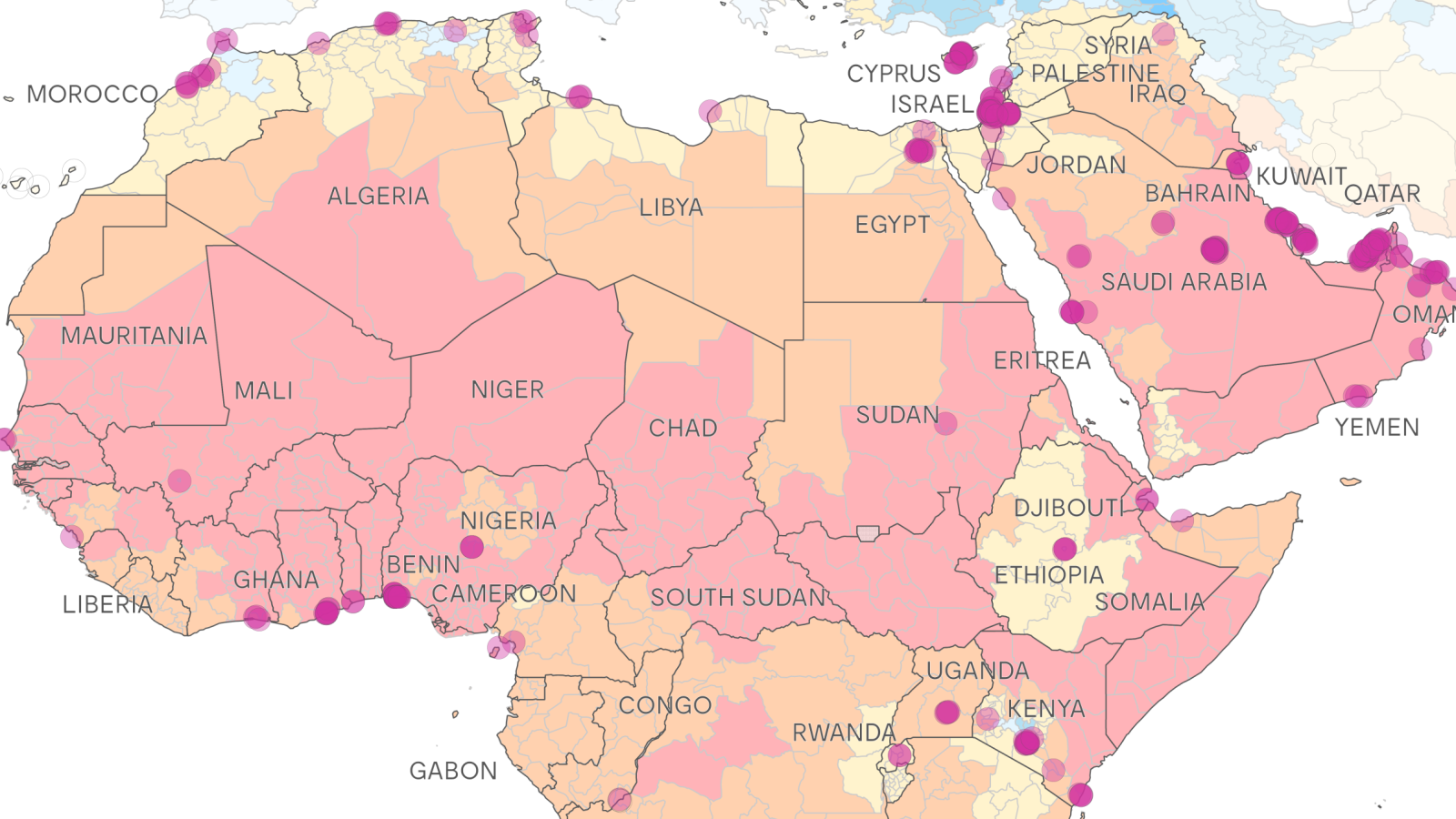

The heatmaps (pun intended) of data centers.

A new type of company is emerging. Feed the models.

A new phenomenon is triggered by the cleverness of the models: rewarding lazy behavior, and even stimulating data hoarding.

People’s compute, and the design and politics of AI infrastructures.

Every new wave of technology disrupting media triggers new responses, often not the most logical ones.

A view from inside the AI bubble: beyond practical problems and speculative scenarios, the hype benefits elites more than it helps the public.

2025 to 2026

AI Deception and Detection in 2025.

Weekly paper to check

This paper introduces queering as a methodological intervention in human–AI entanglements, aimed at pluralizing the self and resisting normative algorithmic logics. (…)

Our exploration unfolds through Undoing Gracia, an autotheoretical experiment seeded with the first author’s autobiographical memories and values, in which Grace interacts with two digital twin agents, Lex and Tortugi, within the speculative world of Gracia. This multi-agent simulation probes the algorithmic borderlands of subjectivity, as the self is iteratively co-performed and transformed through interaction with the agents.

Turtle, G. L., Giaccardi, E., & Bendor, R. (2025). Undoing Gracia: queering the self in the algorithmic borderlands. AI & SOCIETY, 1-18.

What’s up for the coming week?

This week, I am working on the research “How do they view the role of AI in physical space and embodied AI?” Last week, I interviewed Maria Luce Lupetti as one of the first participants in this research. We did a lot of work together during the first two years, as well as in the workshop format. It was nice to speak with her back in Italy, and she has become a voice for participatory AI, as she frames it. I won't go into details here—this is all part of research I'll continue in January.

I will not attend any events this week; of course, there are not many anyway. The traditional Xmas Special of Sensemakers AMS is tonight. For the coming week, this v2 exhibition might be interesting: The Illusion of Thinking.

I'll try to put together a newsletter next week, but since I'm away for the weekend, it might be in a different format. To be on the safe side, expect one around the 29th of December. Have a great week(s), and happy holidays.