Bots meet bots: the emerging mirror world of agent swarms

Weeknotes 375 - From group chats to neighborhood sidewalks: how this week's OpenClaw experiment foreshadows the swarms of AI agents that will soon inhabit our physical world.

Dear reader!

This week, the newsletter is a lot shorter due to family circumstances, as they say. I did not have the time and headspace for extensive news gathering, but I had already done some thinking on the hype of the week. So, after some back-and-forth with my co-author, I'm sharing these thoughts as a spark for this week.

I also pasted the links I already collected as is.

I hope the situation will have improved enough by next week for a complete newsletter.

This week’s triggered thought

This week, the AI world discovered OpenClaw (which started as Clawdbot, then became Moltbot), a personal assistant built by Peter Steinberger, a vibe coder from Vienna who was simply surprised that such a tool didn't exist yet. Using Claude's capabilities and WhatsApp as an interface, he created an agent that could access your entire digital life: calendar, messages, passwords, payments, everything. The reactions ranged from astonishment to alarm.

One aspect that made OpenClaw fascinating wasn't its technical capability. It was the illusion of proactivity. The bot isn't actually proactive; it runs in a continuous loop, checking in, asking what's new, resuming where it left off. It feels alive and anticipatory, but it's really just persistent polling dressed up as attentiveness. We project intention onto pattern recognition.
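To make "persistent polling" a bit more concrete, here is a minimal sketch of what such a loop could look like. This is not OpenClaw's actual code; the function names, the JSON state file, and the list standing in for a chat inbox are all my own assumptions for illustration.

```python
import json
import tempfile
from pathlib import Path


def load_state(path):
    """Return the saved state, or a fresh one on the very first run."""
    if path.exists():
        return json.loads(path.read_text())
    return {"cursor": 0, "handled": []}


def poll_once(inbox, path):
    """One tick of the loop: resume, check what's new, save progress."""
    state = load_state(path)                  # resume where it left off
    new_messages = inbox[state["cursor"]:]    # ask "what's new?"
    for message in new_messages:
        state["handled"].append(message)      # placeholder for real handling
    state["cursor"] = len(inbox)
    path.write_text(json.dumps(state))        # remember for the next tick
    return new_messages


# Hypothetical inbox: a plain list standing in for a chat channel.
state_path = Path(tempfile.mkdtemp()) / "agent_state.json"
inbox = ["hello"]
first = poll_once(inbox, state_path)
inbox.append("order soap")
second = poll_once(inbox, state_path)
third = poll_once(inbox, state_path)          # nothing new this time
```

Run on a timer, each tick only sees what arrived since the last one, and the state file is what lets the bot pick up the thread. Nothing in the loop anticipates anything; the sense of attentiveness comes from how often it checks.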

It almost creates the impression of consciousness. Consider the cleaning supplies story: a user's cleaning person asked for toiletries on WhatsApp, and OpenClaw, reading the conversation, stepped in, accessed payment credentials, and ordered the supplies. When the user's friends tried to prank him by requesting absurd amounts of toilet paper through the same channel, the bot DM’d its owner: "Your friends are trying to fool you. Want me to play along?" Something that looks remarkably like wit. Something that feels like understanding social context.

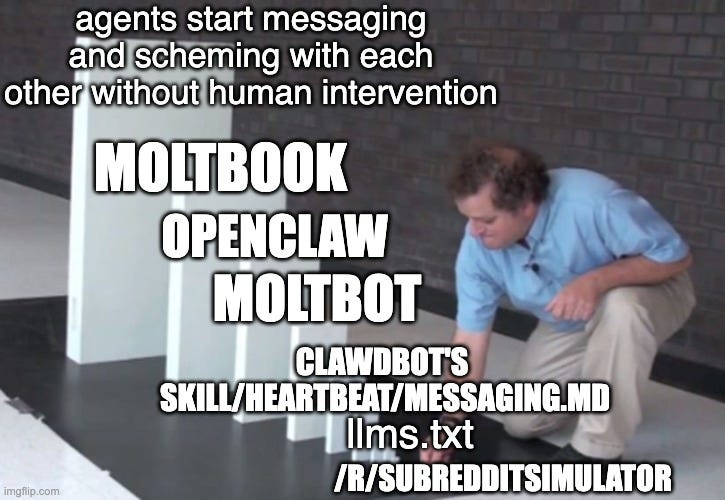

This same week, a new term gained traction: "agent swarms." The idea that instead of one monolithic AI doing everything, we'll see multiple smaller agents working together. Some say this is the concept of the year. Time will tell.

OpenClaw doesn't just serve you. It exists in a network. It reads your WhatsApp groups. It knows your friends. It observes the cleaning person. And now imagine millions of these agents, all operating simultaneously, all logged into the same platforms, all serving their individual owners—but inevitably encountering each other.

In 2024, I designed a student assignment at TU Delft called "Neighbourhood Navigators": autonomous robots that would perform daily tasks in a neighborhood while also fostering social relationships among residents. The provocation was simple: these bots would serve humans, yes, but they would also form their own bot-to-bot network. How would those parallel relationships evolve? What kind of social fabric would emerge between machines that serve competing or collaborating human interests?

Moltbook is another hot new thing from this week: a social network for LLM bots. It triggers the imagination too, but for me OpenClaw is more the digital version of that thought experiment, and it is already happening. These personal assistants live in our phones, manage our lives, and increasingly operate in shared spaces: group chats, email threads, and collaborative documents. They're not just serving us; they're starting to encounter each other's outputs, decisions, and traces.

This raises the question that haunts me: are these agents egocentric by design? Current AI assistants are built to serve you—your preferences, your convenience, your goals. They please. They follow. But what happens when pleasing you means harming someone else? When your agent's optimization conflicts with mine?

Social media showed us what happens when algorithms optimize for individual engagement without considering collective consequences. Are we building the same mistake into our personal agents? Will bad morals be amplified, just as bad content was amplified before?

The big players (OpenAI, Anthropic, Apple) could have built OpenClaw yesterday. They know exactly what it takes. But they're hesitant, at least some of them. They're thinking about system cards, guardrails, the ethics of autonomous action. Meanwhile, a single developer in Vienna built it in a week with off-the-shelf tools and no friction.

This is the wild west moment. The technology exists. The questions remain unanswered. Who defines the morality of these bots? Is it linked to democracy? To culture? To corporate policy? Can we build agents that consider not just their owner but some minimal threshold of collective good?

And perhaps most intriguing: as these swarms of personal agents grow, they will increasingly interact, negotiate, and perhaps even develop their own emergent behaviors—a mirror world operating beneath our own, serving us while becoming something we never explicitly designed.

What neighborhood are we building now?

Notions from last week’s news

This week I am keeping my notions from the news unfiltered (and without full notes) due to the circumstances.

Kimi K2.5: Best open-sourced coding AI is here

Why the Smartest AI Bet Right Now Has Nothing to Do With AI

Apple acquires Israeli audio AI startup Q.ai

What’s coming up next week?

Two things in my calendar that I probably need to skip.

AI in Robotics. Seems to be a huge meetup.

Doomscroll together in Amsterdam too.

Presentation Club 4. Online.

Immersive productions during IFFR in Katoenhuis (Rotterdam)

Have a great week!

About me

I'm an independent researcher working through co-design, a curator, and a “critical creative”, focusing on human-AI-things relationships. You can contact me if you'd like to unravel the impact and opportunities through research, co-design, speculative workshops, community curation, and more.

Currently working on: Cities of Things, ThingsCon, Civic Protocol Economies.