AI's stochastic nature and our interface confusion

Weeknotes 357 - A short newsletter announcing a vacation break, with some short thoughts from the last few weeks.

This is a short newsletter to explain why you will not receive a normal newsletter today or in the next two weeks, and why you also missed one last week.

To start with the latter: we organized the Civic Protocol Economies Design Charrette last week, from Monday to Wednesday, and with the preparations included, it was hard to complete the newsletter. I had started to look back at the Apple event, as a follow-up to newsletter 356 (Building the RealOS through hybrid intelligent layers), and also had a draft for the triggered thought. I would like to share both here today.

The design charrette was very fulfilling, with a great group of people, inspiring speakers and food for future thinking and research (through design) activities. One of the participants, Viktor Bedö, wrote a lovely LinkedIn post reporting on it; check it out here. Also read the report by Julia Barashkova.

I will not be posting in the coming weeks because of a three-week vacation. I am now on a train from Oslo to Bergen, where I will embark on a ship for almost two weeks. I will be back for the Society 5.0 Festival, to host a workshop on 16 October, sharing some of my impressions of the design charrette, among other things.

As hybrid realities merge physical and digital spaces, we face a critical question: who controls these new environments? Current AI development prioritises individual convenience and corporate profit over collective well-being. This workshop explores how communities can reclaim agency in designing human-AI collaborations that serve the common good.

And of course I will also be back in time for the next ThingsCon Salon on 29 October. Tessa and Mike will organise a great workshop on maintaining good intentions in the smart city.

Finally, we opened the early bird registration for TH/NGS 2025 (12 December), after we sharpened the theme RESIZE < REMIX < REGEN.

Looking back at the Apple event

I've been thinking about Kevin Roose's take on the Hard Fork podcast on the recent iPhone event. What struck me was his observation about how Apple might be foreshadowing more wearable devices in the coming years. The design choice to place all the computing power of the new iPhone Air on that plateau seems intentional - almost as if they're preparing us for a future where the intelligence component becomes separable. Several others repeated this take.

Looking at it another way, perhaps they created this larger plateau specifically to establish this modular concept. The intelligent elements could become something like a mobile puck - the heart, engine, and mind of the phone - while the rest is essentially battery, screen, and I/O devices. It's reminiscent of the Fairphone philosophy but with Apple's execution.

If they continue down this path of modular design rather than maximizing space optimization, we might indeed see a separate intelligence unit next year that connects wirelessly to various accessories - headphones, glasses, other wearables, or a foldable screen. This aligns with what has seemed a possible path for years: the thinking component becomes the true device, while everything else serves as an accessory.

Week 357: AI's stochastic nature and our interface confusion

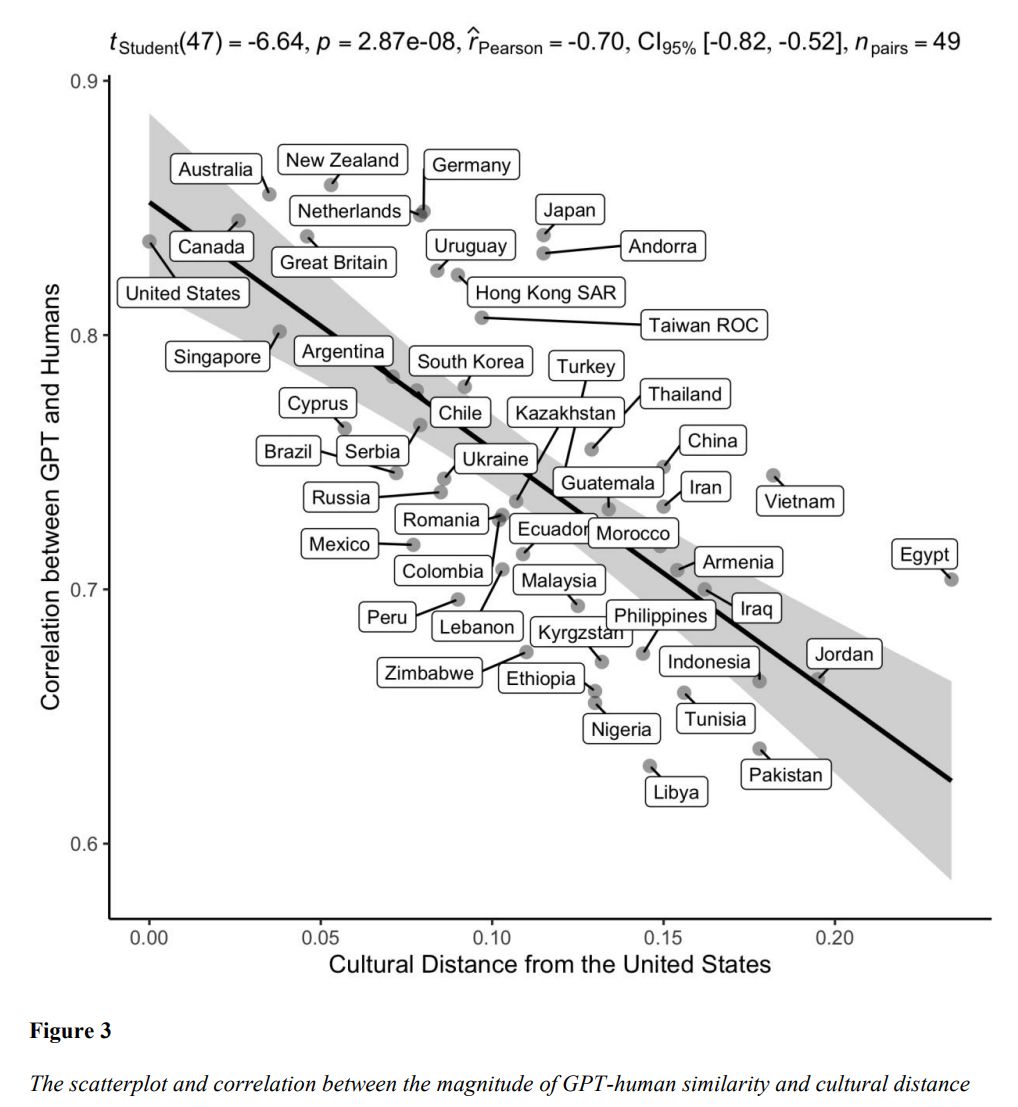

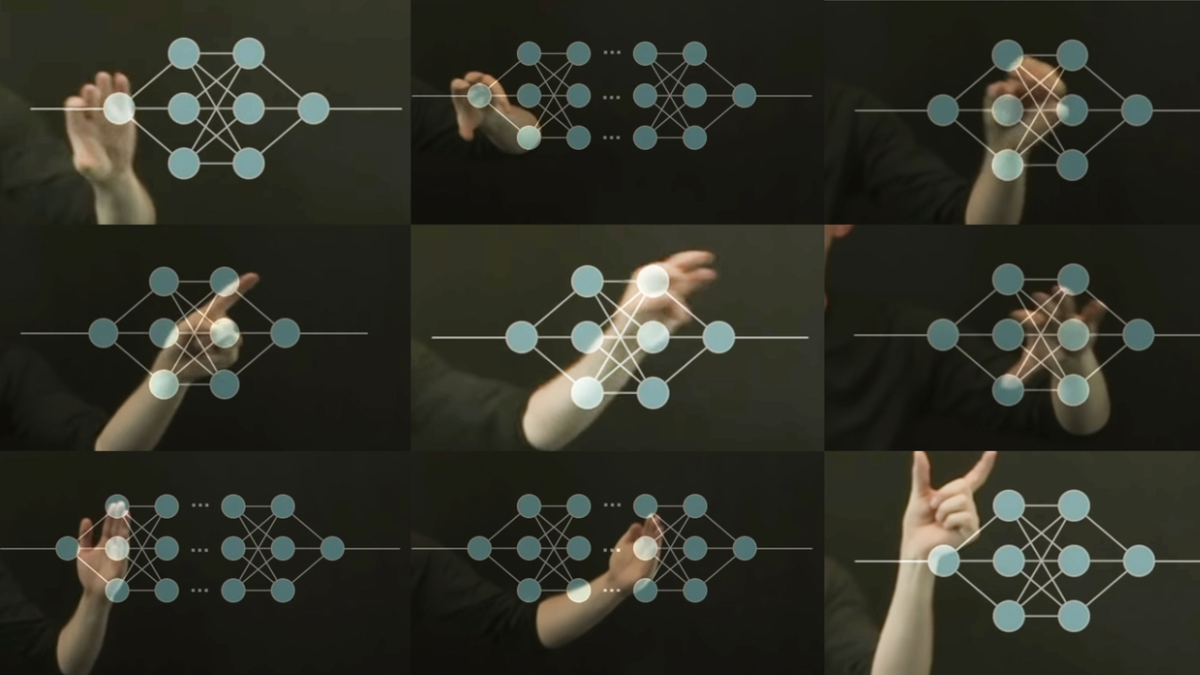

Another thought that's been triggered by a recent AI Report podcast discussion: the stochastic nature of AI predictions, which is fundamentally misunderstood, is not only causing hallucinations but potentially enabling a new computing paradigm that's more generic and human-like in its thinking, versus the traditional strict, database-driven approach.

What's particularly interesting is how these different paradigms are being mixed in our interfaces. Google Search, for example, now combines AI's "generic thinking" capabilities with the traditional precision-focused search results. This hybrid approach is causing confusion as our tools oscillate between providing specific answers and operating with creative uncertainty.

The way GPT-5 routes queries to different specialized models based on the question type is essentially pre-loading this concept into our systems. Although OpenAI is primarily focused on the generic, creative aspects (and on limiting hallucinations), the real strength may lie in this triage capability between different types of thinking.
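To make the triage idea a bit more concrete, here is a minimal Python sketch. It is not how GPT-5 or any OpenAI system actually routes queries; the keyword heuristic, the two handlers (`precise_lookup`, `creative_model`) and all other names are hypothetical stand-ins, only meant to show the shape of "classify first, then hand over to a specialised mode of thinking".

```python
from typing import Callable, Dict, Tuple

# Hypothetical stand-ins for two "types of thinking".
def precise_lookup(query: str) -> str:
    """Pretend database-style answer: strict and specific."""
    return f"[precise] looked up: {query}"

def creative_model(query: str) -> str:
    """Pretend generative answer: open-ended and uncertain."""
    return f"[creative] explored: {query}"

# Assumption: a crude keyword heuristic decides the question type.
# A real router would use something far more capable.
FACT_MARKERS = ("when", "who", "where", "how many", "what year")

def classify(query: str) -> str:
    """Return 'precise' for fact-like questions, else 'creative'."""
    q = query.strip().lower()
    return "precise" if q.startswith(FACT_MARKERS) else "creative"

ROUTES: Dict[str, Callable[[str], str]] = {
    "precise": precise_lookup,
    "creative": creative_model,
}

def route(query: str) -> Tuple[str, str]:
    """Triage the query, then return (chosen mode, answer)."""
    mode = classify(query)
    return mode, ROUTES[mode](query)

if __name__ == "__main__":
    for question in ("When is the next ThingsCon Salon?",
                     "Imagine a phone built around a separate intelligence puck"):
        mode, answer = route(question)
        print(mode, "->", answer)
```

Returning the chosen mode together with the answer is a deliberate choice in this sketch: it keeps the triage visible to the user, which is the kind of friction that helps us understand what sort of answer we are getting.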

As users become accustomed to tools that dynamically choose between different models for different tasks, we're being educated about this hybrid world. We're learning that we need to combine different tools for comprehensive understanding, and that some friction in the system actually helps us understand its limitations and capabilities.

The challenge ahead isn't just improving performance in either hallucination reduction or creative thinking - individual scores in these areas may already be sufficient. What we really need is a trustworthy self-reflection system within AI, allowing it to recognize when it's hallucinating. Like someone with mental health challenges who struggles to recognize their own hallucinations, AI needs that meta-awareness to evaluate its own outputs.

Perhaps the solution involves different types of AI conducting peer reviews on each other's work, creating a system of checks and balances that mirrors how humans collaborate to verify information and catch errors.
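As a thought experiment, such a peer review could be sketched as below. This is a minimal sketch under loud assumptions: each "reviewer" is a hypothetical stand-in that only checks a claim against its own small set of known statements, and the majority threshold is an arbitrary choice. Real reviewing models would be much richer; the point is only the checks-and-balances structure.

```python
from typing import Callable, Dict, List, Set, Union

Reviewer = Callable[[str], bool]

def make_reviewer(known_statements: Set[str]) -> Reviewer:
    """Hypothetical reviewer: approves a claim only if it knows it."""
    def review(claim: str) -> bool:
        return claim in known_statements
    return review

def peer_review(claim: str, reviewers: List[Reviewer],
                quorum: float = 0.5) -> Dict[str, Union[str, float, bool]]:
    """Collect votes and flag the claim unless a majority approves."""
    if not reviewers:
        raise ValueError("at least one reviewer is needed")
    votes = [reviewer(claim) for reviewer in reviewers]
    approval = sum(votes) / len(votes)
    return {
        "claim": claim,
        "approval": approval,
        # Flagged means: treat as a possible hallucination.
        "flagged": approval <= quorum,
    }

if __name__ == "__main__":
    panel = [
        make_reviewer({"Bergen is in Norway", "Oslo is in Norway"}),
        make_reviewer({"Bergen is in Norway"}),
        make_reviewer({"Oslo is in Norway"}),
    ]
    print(peer_review("Bergen is in Norway", panel))
    print(peer_review("Bergen is in Sweden", panel))
```

Note that the panel does not decide what is true; it only signals where the reviewers disagree with the claim, which is where a human (or another check) should take a closer look.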

Notions from the news of the last (two) weeks

When I return, I will review the main news events, as well as the ones that occurred during my vacation.

Like the new Meta Glasses. They indicate an in-between phase of physical AI, subtitling the real world, and also show how this “agentic AI for the physical world” is becoming a key step in our human-AI-things relations (the current theme of Cities of Things). The Glasses are also another attempt in that specific product category, as an archetype of smart wearables: the quest to find the use case for these devices. I think this might still not be the right one, but it can teach us a lot about what we need. And what not.

Ross Dawson invited me to the podcast Human+AI, and we spoke about the friction needed for valuable relations with AI, and more: you can find and listen to the episode here via humansplus.ai.

Enjoy your weeks and see you in October!

PS: these are some of the links I captured, shared here without the usual contextual thoughts: