A quiet rise of bottom-up device agent swarms

Weeknotes 376 - Another lens on agent swarms (or teams), as a bottom-up movement of computational devices iterating into agents. And more reflections on the news of last week.

Dear reader!

Luckily, the unexpected family circumstances, a health condition of a family member, have normalized this week. I spent a lot of time visiting the hospital daily, but things are getting back to almost normal. Let’s dive into last week…

Week 376: a quiet rise of bottom-up device agent swarms

I had some more interviews for the research on “State of Cities of Things”, a research endeavor that I will continue until the beginning of April, when I plan to have an event to share my findings. I have to say that I am very happy with the insights from the interviews so far. I am looking forward to the ones already planned, and I have some new invitations in the works that I still need to follow up on.

Additionally, I was able to join “AI in Robotics” at the AI on the Amstel meetup: a panel, some demos, and a lot of attendees. Read the report by the organiser; I liked his focus on having an event about the real application of AI instead of just consulting about it. And it was great to see how much interest there is (I estimate about 300 attendees). My 2 cents: a panel always makes it harder for me to focus on the stories told. The three panelists all focused on robotics in a warehouse or on a building site, which helps. The AI part mainly involves creating and enhancing the robotics learning process to optimise workflows. It made me think about how there might be differences in learning strategies, comparable to the differences between school, uni, and a PhD, for instance. But that is more of a follow-up thing to dive into…

I also joined a short online webinar on Futures Design, organized by RCA. It discussed the role of futures design and designers. The terminology is confusingly overlapping, from futurist to strategist; it is better to focus on the desired outcomes than on the methodology labels. Some quotes:

- 90% of design work = creating conditions for design work to happen

- Use of AI risks “cognitive debt” - having answers without doing the thinking.

- Visual design creates dangerous over-promising

- Designer value shifting to process bookends:

  - Asking better critical questions upfront

  - Taking liability/responsibility for final outcomes

- Social problems never solved, only “resolved over and over again” (1973 planning theory)

Let’s jump into the triggered thought. The thought(s) of last week deserve some more angles as the hype of OpenClaw and Moltbook is still real. It was one of the interviews that triggered this thinking.

This week’s triggered thought

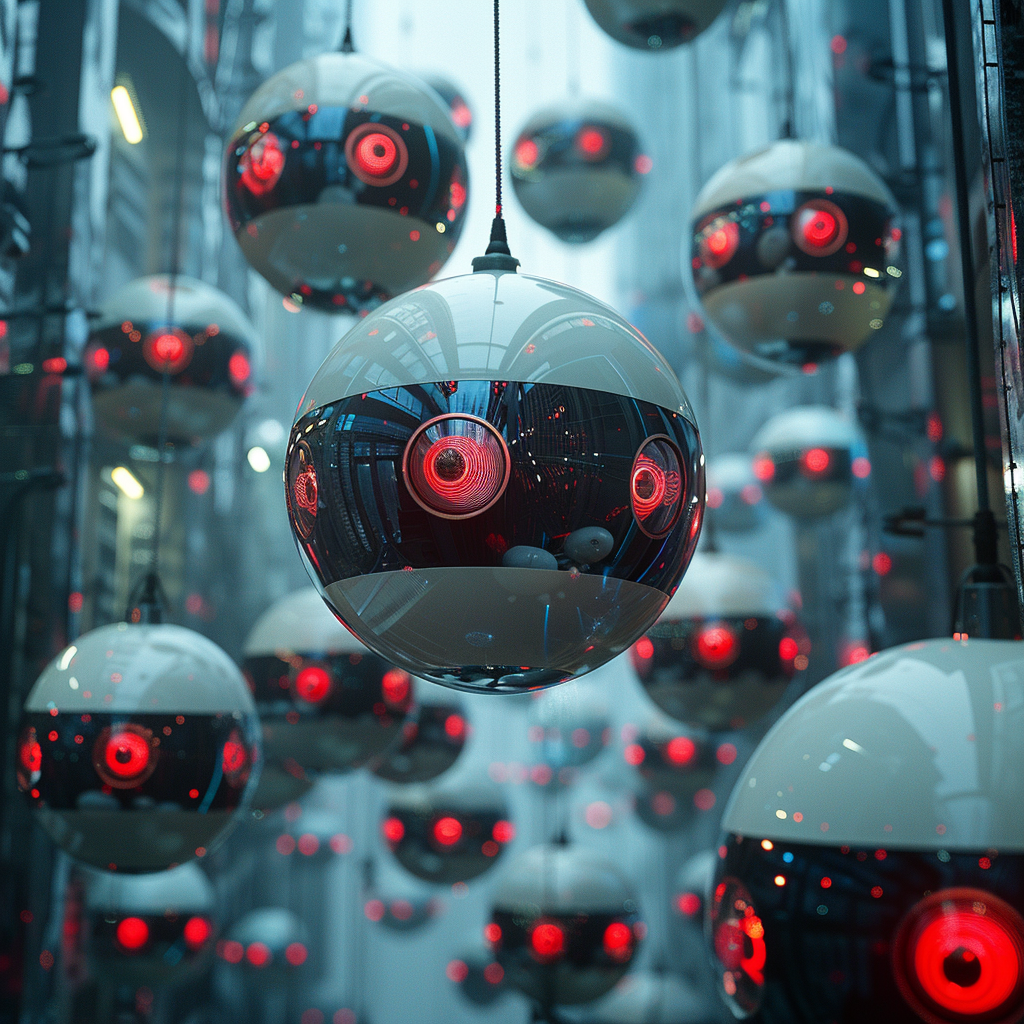

Last week, I wrote about agent swarms and the new tools enabling AI agents to work together in teams—OpenClaw, Moltbook, and the latest Claude releases with their "delegated teams" capabilities. I want to offer a different angle this week. What if the more interesting development isn't building agent swarms from scratch, but watching them emerge bottom-up? Not in our software products, but within the things we use.

Think about your home. Right now, it likely contains multiple devices with computational layers: thermostats, washing machines, vacuum cleaners, lighting systems, solar panels, smart plugs. Not all intelligent, sometimes ‘just’ computing. The Nest thermostat has long been the poster child for the category—a device that learned your patterns and made small decisions on your behalf. Most of the connected devices have been disappointing as smart devices, remaining mere gadgets. But they already have the infrastructure for more.

Let’s frame it as ubiquitous AI: not new devices, but existing devices gaining intelligence in steps. The computational layer is already there. What happens when these chips evolve? When they become aware of themselves, aware of others, and begin forming their own connections?

I've been thinking about this in the context of our Civic Protocol Economy work. We often refer to the example of a floating-house neighborhood in Amsterdam: each home has batteries, heating systems, and solar panels, all interconnected, with one shared connection to the external grid. Inside this micro-network, neighbors balance energy consumption in real time: if someone needs more power, they can buy it from a neighbor with a surplus. This already works as a connected system. But what if all these connected devices became intelligent actors?
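To make the balancing idea a bit more tangible, here is a minimal sketch of how surplus and deficit could be matched inside such a micro-network before anything touches the shared grid connection. It is purely illustrative and not how the Amsterdam neighborhood actually works: the names `Home`, `balance_kwh`, and `match_energy` are my own assumptions, the matching is a simple greedy pairing, and pricing is left out entirely.

```python
from dataclasses import dataclass


@dataclass
class Home:
    # Hypothetical model of one house in the micro-network.
    name: str
    balance_kwh: float  # positive = surplus to sell, negative = deficit to cover


def match_energy(homes):
    """Greedily pair homes that have a deficit with neighbors that have a surplus.

    Returns (trades, grid_kwh): trades is a list of (seller, buyer, kwh) tuples,
    and grid_kwh is what the neighborhood still has to import from the shared
    grid connection (positive) or can export to it (negative).
    """
    sellers = [[h.name, h.balance_kwh] for h in homes if h.balance_kwh > 0]
    buyers = [[h.name, -h.balance_kwh] for h in homes if h.balance_kwh < 0]
    trades = []
    s = b = 0
    while s < len(sellers) and b < len(buyers):
        # Trade as much as the current seller can give and the buyer needs.
        kwh = min(sellers[s][1], buyers[b][1])
        trades.append((sellers[s][0], buyers[b][0], kwh))
        sellers[s][1] -= kwh
        buyers[b][1] -= kwh
        # Move on once a seller is empty or a buyer is satisfied.
        if sellers[s][1] == 0:
            s += 1
        if buyers[b][1] == 0:
            b += 1
    unmet = sum(need for _, need in buyers[b:])
    unsold = sum(extra for _, extra in sellers[s:])
    return trades, unmet - unsold


if __name__ == "__main__":
    neighborhood = [Home("A", 3.0), Home("B", -2.0), Home("C", -2.0)]
    trades, grid_kwh = match_energy(neighborhood)
    print(trades)
    print(grid_kwh)
```

In this toy setup, home A covers all of B's need and part of C's, and only the remainder is drawn from the external grid. An intelligent-actor version would replace the fixed greedy rule with devices negotiating these trades themselves.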

The distinction matters. We can imagine agent swarms as something we build deliberately, deploying teams of AI agents into the world to accomplish tasks together. That's what Moltbook is trying to show, and what Claude Opus 4.6 is introducing with agent teams for software. We can also imagine agent swarms emerging organically from the computational fabric that already surrounds us. Your solar panel starts negotiating with your neighbor's battery. Your washing machine coordinates with your energy system to run when power is cheapest. Your blinds talk to your heating system. No one built this swarm from the top down; it assembled itself from the bottom up. It is an AI agent team with embodied capabilities.

This bottom-up emergence seems more likely than the spectacle of worldwide agent networks starting religions or forming economies from scratch. The mundane version, a small ecosystem of connected devices in your home quietly organizing themselves into something cooperative, may be the real story. I am curious whether bigger quests will emerge that inspire these teams. Lowering the energy use of their human actors feels like a good cause. Or do we need to worry about a new paperclip saga?

I leave thinking through the scenarios and potential consequences for another week. Do we need to understand who is the one with human intentions? Some people want us to buy proof-of-human systems like Worldcoin (of course also following an old pattern: offering a solution to a problem you created yourself). And, more interesting: how do we develop design patterns for securing common interest and societal respect, built in from the core and not as an afterthought (the ethics paragraph)?

So it is very interesting to think about when people's bots meet other people's bots. But what happens when our things become bots, and those bots meet each other? The agents might already be in our homes. They're just waiting to wake up.

Notions from last week’s news

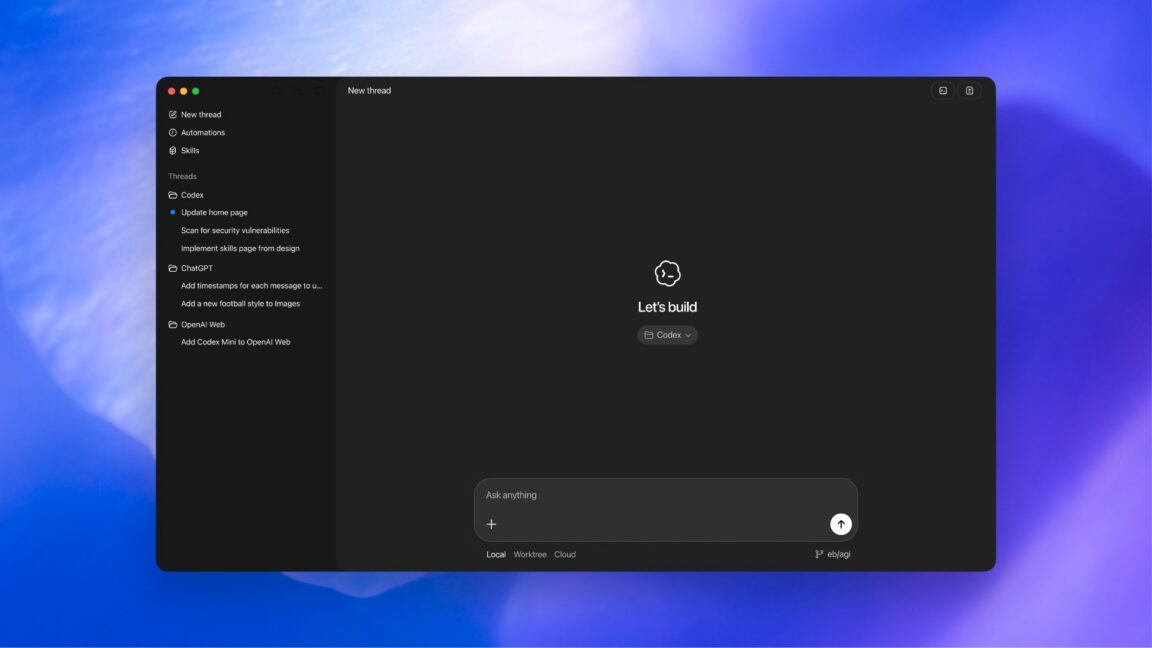

Two new frontier models, or versions of them, are grabbing attention: OpenAI 5.3, with more focus on the coding tool Codex, also as a tool for more than code, and Anthropic Claude Opus 4.6, which focuses on the capabilities for agent teams. And more.

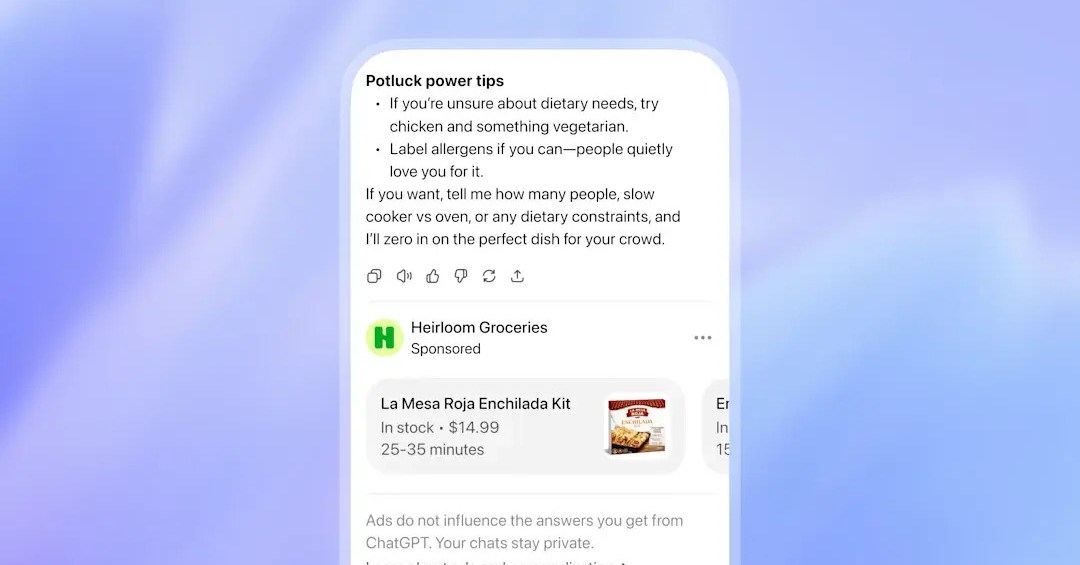

And in the meantime, ads have arrived in the free tier of ChatGPT.

Human-AI partnerships

Our relations with agents keep buzzing, or better said, the relations of the agents with each other. When will we have the first agents juice channel?

In the end, we buy stuff. Or we let stuff buy itself.

Will we have a collective AI psychosis as Suleyman is stating?

Designing for multi-agent architectures. Don’t blame prompts, it might be bad coordination.

Design a workflow for thinking.

Games teach transferable skills. To humans and AI alike. Every did an experiment.

Robotic performances

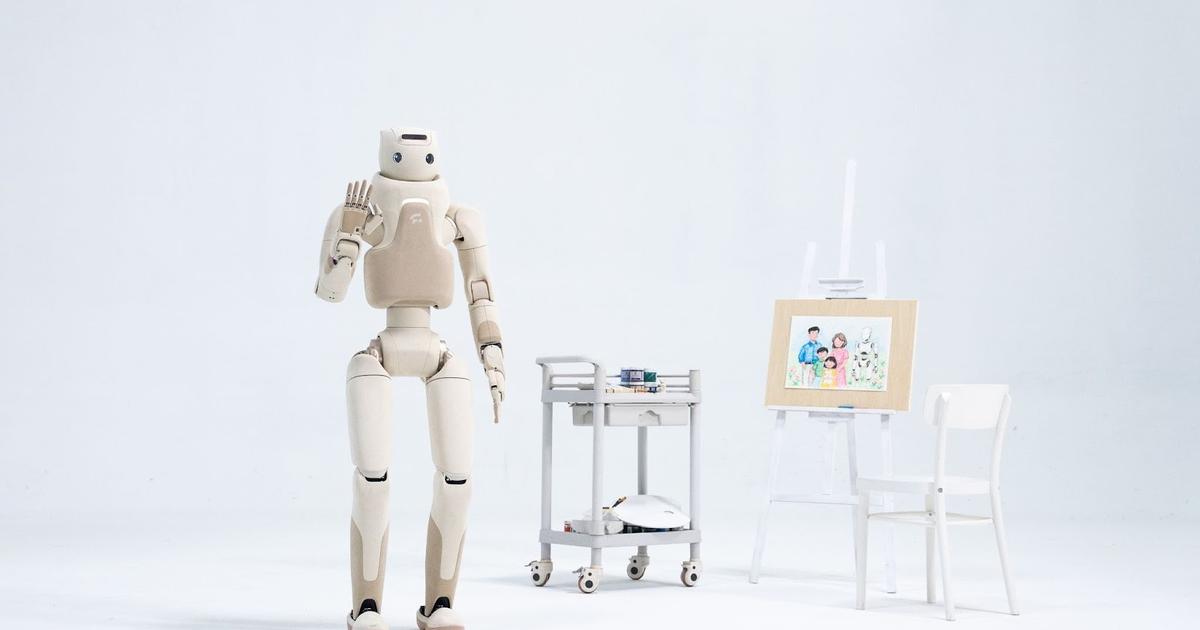

Humanizing the machines, finding the right expressions for humanoids.

Will China do the same overtaking with humanoids as it did with EVs?

Not only self-reproducing robots, but also self-printing ones?

And orchestrating humanoid fleets might be the next thing to outsource.

Immersive connectedness

In other news, connecting is not a given.

Feel the insecurity as an immersive noise.

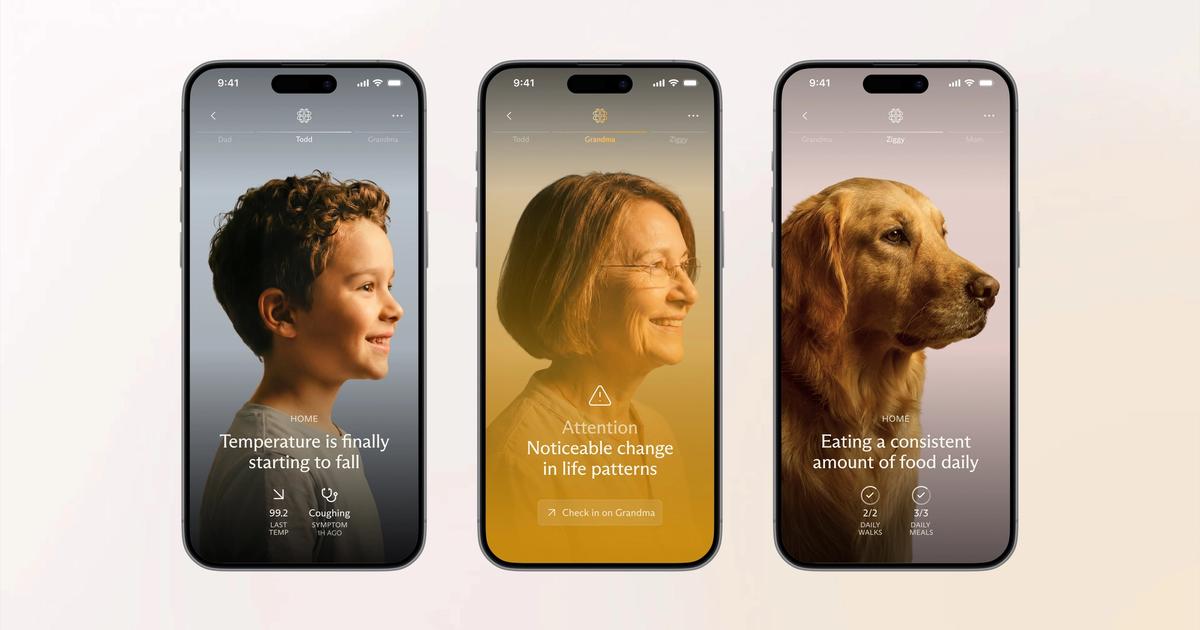

AI health is a logical extension of fitness trackers.

Can CarPlay use Gemini to compete with Google Car?

Tech societies

The new human economy, interesting explorations by Indy.

Weak points in Starlink. These conflicts are sandboxes.

Things change. That is sure.

Communities are everywhere, and wallets can be a binding driving force.

Space-based data centers are a thing? Or a way to boost the value of certain companies?

AI makes chips scarce, influencing the pricing of other chip-intensive technologies like phones.

Is this graph representing AI utopia or doom? Or is it not understood well at all?

The role of big tech in ICE and facilitating authoritarianism needs a close watch.

Is vibe coding killing open source? Open source needs contributions from the users of the code. Maybe the agents can be stimulated to contribute too? It is a key question: will open source still exist in a reality where teams are built predominantly with agents, and what does this mean for innovative new software development?

Weekly paper to check

(…) we identify three approaches by which authors tend to formulate the moral concerns raised by AI: principles, lived realities, and power structures. These approaches can be viewed as lenses through which authors investigate the field, and which each entail specific theoretical sensitivities, disciplinary traditions, and methodologies, and hence, specific strengths and weaknesses.

Groen, E.L.M., Sharon, T. & Becker, M. An overview of AI ethics: moral concerns through the lens of principles, lived realities and power structures. AI Ethics 6, 121 (2026). https://doi.org/10.1007/s43681-025-00955-7

What’s up for the coming week?

My week will be taken up by the ESConference and Highlight Delft, where Wijkbot will be present, and where the rest of the program looks great too. There is also an event on tooling for the next economy.